Implemented a single-node Kubernetes cluster locally using Docker Desktop and containerized an application built with Express.js and Node.js. Deployed the application using two distinct approaches: monolithic and microservices architectures. Conducted load testing with the Locust framework to evaluate pod auto-scaling behaviour based on varying load levels. Demonstrated the advantages of the microservices approach, where only the specific service under high demand scaled up, eliminating the need to scale the entire application.

Hi guys, hope you are doing well. I am learning and writing this article simultaneously, so please take this with a grain of salt.

YouTube video comparing monolithic and microservices architecture, with a demo of horizontal pod auto-scaling under load testing.

1. Motivation for project:-

A few days back I was watching the Olympics on JioCinema, and I saw 4.5 crore (45 million) live users, while in the evening it was around 20 lakh (2 million) live users.

Peak time

Late night time

This made me curious about how they scale up or down depending on the load, both to keep the service responsive and to cut costs. I then read more about how this is done using Kubernetes and Docker.

2. Tech stack:-

| Technology | Description |
|---|---|
| Server application | JavaScript, Node.js, Express |
| Containerization | Docker, Docker Image, Docker Hub |
| Kubernetes Cluster | Kubernetes in Docker Desktop [Create single node, local k8s cluster] |
| Load Testing | Locust framework in Python |
| k8s Services | LoadBalancer, NodePort, Deployment, Pods, Horizontal Pod Autoscaling |
| Kubernetes Dashboard | Keep track of pods and resource utilization |
| kubectl | Command line tool to interact with the k8s cluster |

| Term | Description |
|---|---|
| Docker | A tool used to create and manage containers. Think of a container as similar to a VM: it has everything the code needs to run and is isolated from the host machine. |
| Docker Image | A read-only template, usually built from a Dockerfile, that packages the code with everything it needs to run. It is often uploaded to Docker Hub, and running containers are created from it. |
| Kubernetes | A tool that pulls the image from Docker Hub and creates new containers or deletes running ones depending on the load. |
| Pods | The smallest unit that can be deployed in k8s. A pod can have multiple containers. |
| Cluster | A master node and multiple worker nodes. The master node is the brain, which schedules pods and manages the load across the workers. The pods in a cluster can be spread across different nodes. |
| Node | A VM or physical computer. |

Hierarchy in easy terms:

Cluster > Node > Pod > Container

To run Kubernetes locally, we use the Kubernetes feature built into Docker Desktop, which can be enabled in the settings.

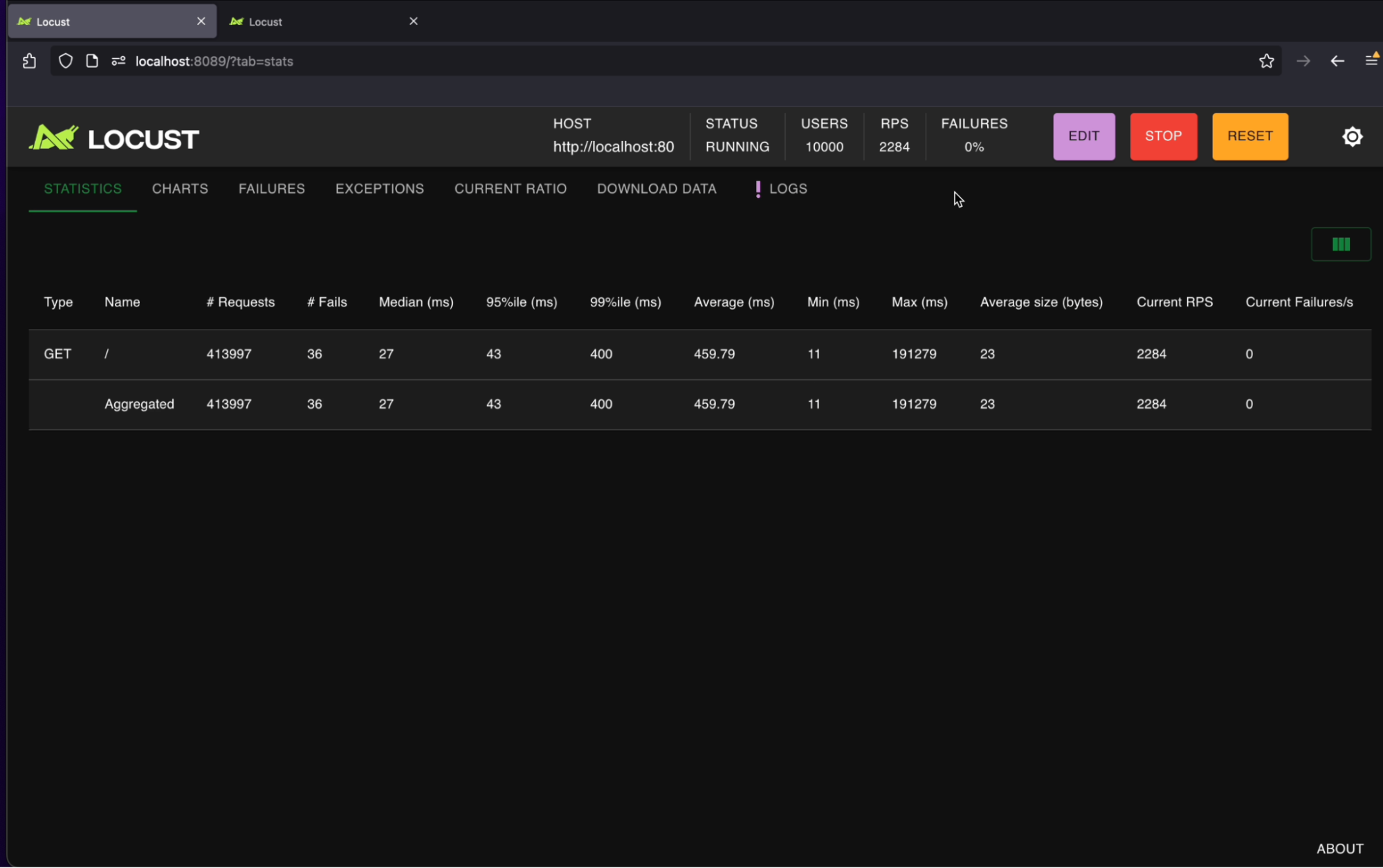

To create load on an API endpoint, we use a tool called Locust. This shows us whether k8s is working properly and scaling correctly.

Kubernetes Dashboard

Locust Framework

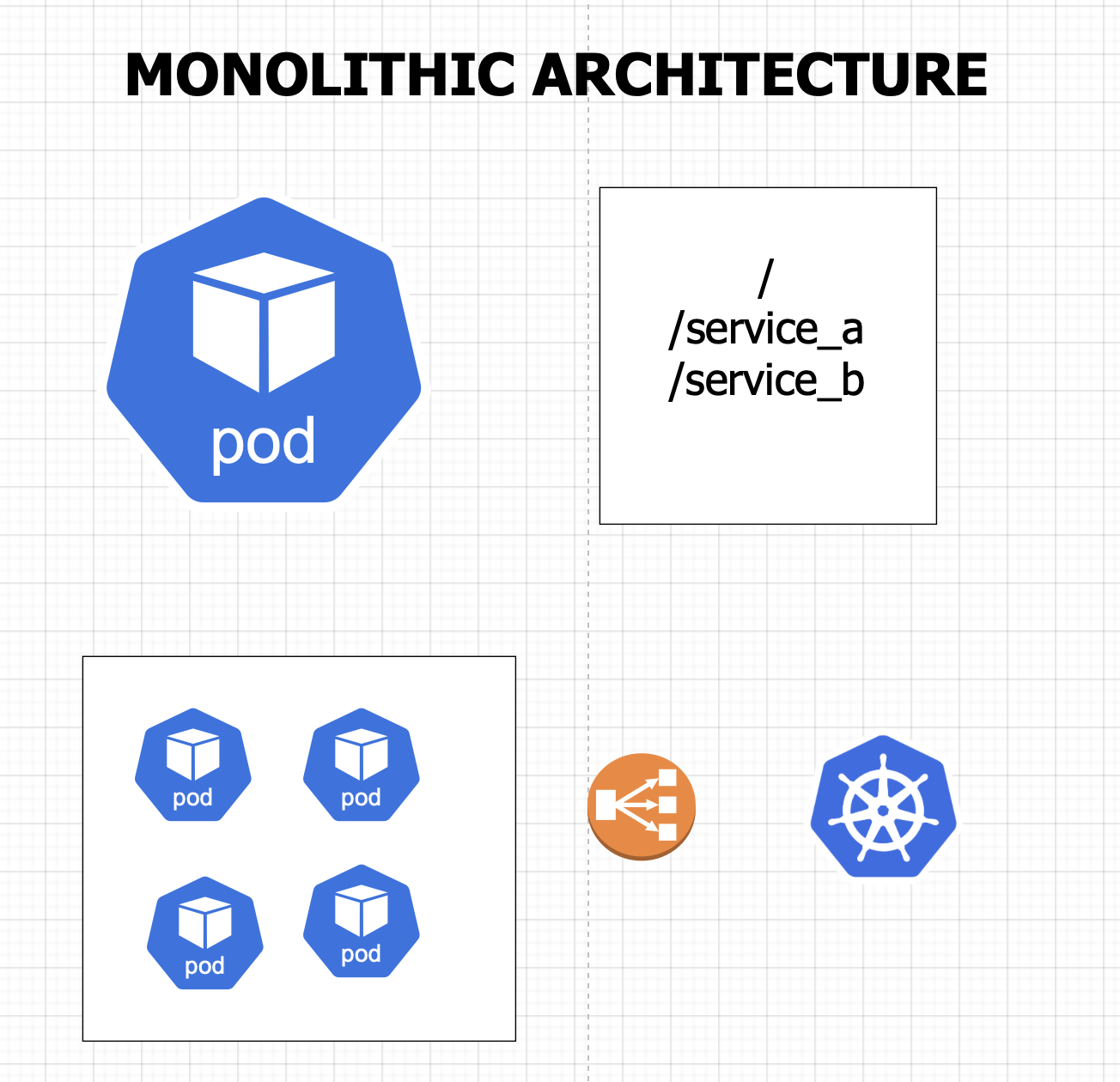

3. Monolithic architecture:-

3.1. Setup explanation:-

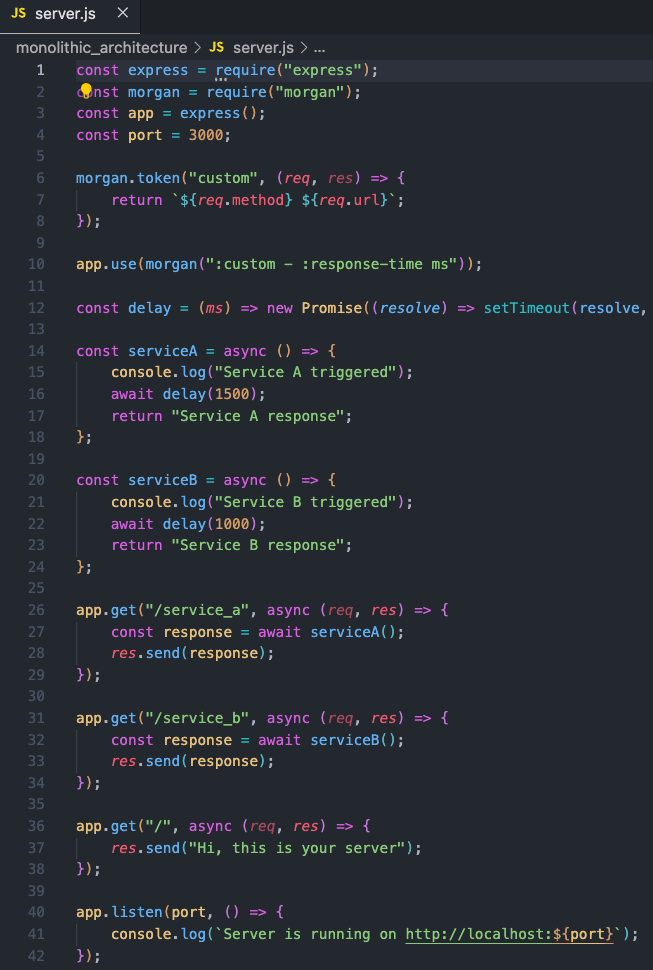

In this approach, we put the whole application in one single container. This is really easy to set up, but it has a few drawbacks.

Packing the full application in single pod

We have 3 endpoints defined here (/, /service_a, /service_b) and a dummy service that is triggered after a request is made to an endpoint.
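A minimal sketch of such a monolith is shown below. It assumes Node's built-in `http` module instead of Express so that it runs without installing anything. The three paths come from this article; the `dummyService` helper, its loop size, the response shape, and port 3000 are made up for illustration and are not the code from the repository.

```javascript
const http = require("http");

// Hypothetical stand-in for the dummy service: burns a little CPU so that
// load on an endpoint shows up as CPU usage on the pod.
function dummyService(name, iterations = 100000) {
  let total = 0;
  for (let i = 0; i < iterations; i++) {
    total += i % 7;
  }
  return { service: name, checksum: total };
}

// All three endpoints live in one process, which is what makes this a monolith.
const routes = new Map([
  ["/", () => ({ service: "root", message: "ok" })],
  ["/service_a", () => dummyService("service_a")],
  ["/service_b", () => dummyService("service_b")],
]);

// Pure routing step: path in, { status, body } out. It is kept apart from
// the HTTP plumbing so it can be exercised without opening a port.
function handle(path) {
  const route = routes.get(path);
  if (!route) {
    return { status: 404, body: { error: "not found" } };
  }
  return { status: 200, body: route() };
}

// Builds the server but does not start listening.
function createApp() {
  return http.createServer((req, res) => {
    const { pathname } = new URL(req.url, "http://localhost");
    const { status, body } = handle(pathname);
    res.writeHead(status, { "Content-Type": "application/json" });
    res.end(JSON.stringify(body));
  });
}

// To run it locally (the port is an assumption):
// createApp().listen(3000);
```

Because every route shares one process, any load on `/service_b` consumes CPU in the same container that also serves `/` and `/service_a`.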

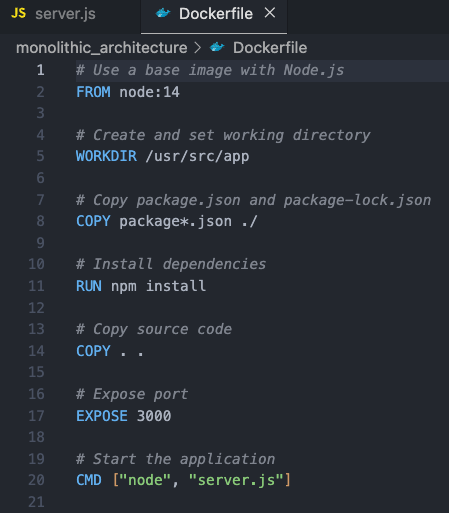

This is the Dockerfile. We use it to build a Docker image of the application and push the image to Docker Hub.

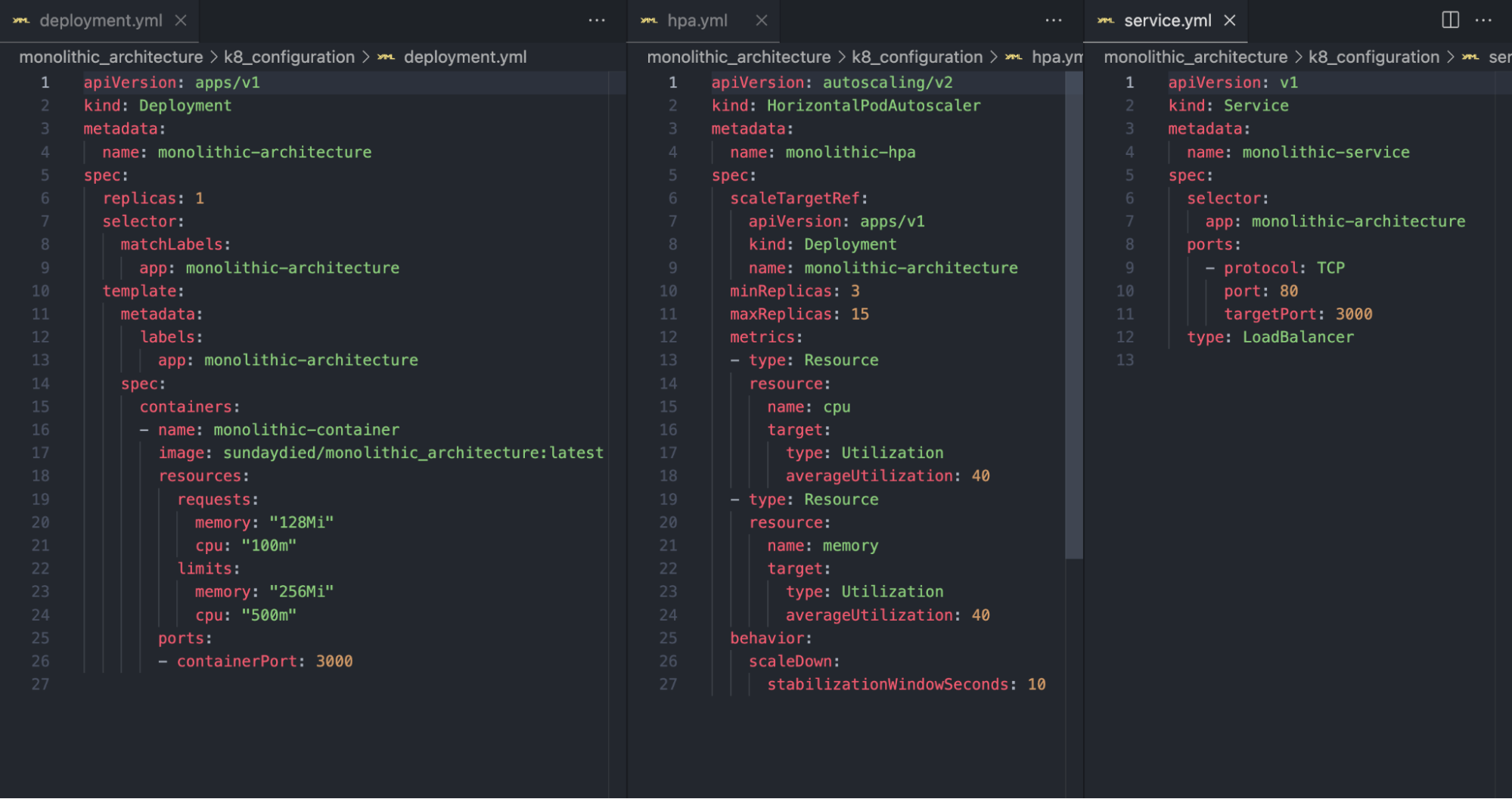

Kubernetes configuration files:

- deployment.yml: Defines the structure of an individual pod, including its port details, resource requirements, and limits.

- hpa.yml: Specifies the minimum number of replicas and provides scaling instructions based on usage.

- service.yml: Acts as a Load Balancer, distributing the load evenly to prevent overwhelming a single pod and improving performance.
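A rough sketch of what these three files could contain is shown below, written as JavaScript objects and printed as JSON (kubectl accepts JSON manifests as well as YAML). The replica bounds of 3 and 15 mirror the test runs described in this article; the names, image, ports, resource values, and the 50% CPU target are assumptions, not the values from the repository.

```javascript
const APP = "monolith-app"; // hypothetical name

// deployment.yml: pod template, port, resource requests and limits.
const deployment = {
  apiVersion: "apps/v1",
  kind: "Deployment",
  metadata: { name: APP },
  spec: {
    replicas: 3,
    selector: { matchLabels: { app: APP } },
    template: {
      metadata: { labels: { app: APP } },
      spec: {
        containers: [
          {
            name: APP,
            image: "example/monolith-app:latest", // hypothetical image
            ports: [{ containerPort: 3000 }], // assumed port
            resources: {
              requests: { cpu: "100m", memory: "64Mi" }, // assumed values
              limits: { cpu: "200m", memory: "128Mi" }, // assumed values
            },
          },
        ],
      },
    },
  },
};

// hpa.yml: replica bounds plus the metric that drives scaling.
const hpa = {
  apiVersion: "autoscaling/v2",
  kind: "HorizontalPodAutoscaler",
  metadata: { name: `${APP}-hpa` },
  spec: {
    scaleTargetRef: { apiVersion: "apps/v1", kind: "Deployment", name: APP },
    minReplicas: 3,
    maxReplicas: 15,
    metrics: [
      {
        type: "Resource",
        resource: {
          name: "cpu",
          target: { type: "Utilization", averageUtilization: 50 }, // assumed target
        },
      },
    ],
  },
};

// service.yml: a LoadBalancer Service that spreads traffic over matching pods.
const service = {
  apiVersion: "v1",
  kind: "Service",
  metadata: { name: `${APP}-service` },
  spec: {
    type: "LoadBalancer",
    selector: { app: APP },
    ports: [{ port: 80, targetPort: 3000 }],
  },
};

// A v1 List wraps all three objects in one JSON document.
const manifests = { apiVersion: "v1", kind: "List", items: [deployment, hpa, service] };
console.log(JSON.stringify(manifests, null, 2));
```

The pieces are tied together by name and label: the HPA targets the Deployment by name, and the Service finds the pods through the `app` label.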

The problem with this is that if the load on one particular endpoint increases, more replicas of the whole application spin up, even though the traffic is only directed at that one endpoint.

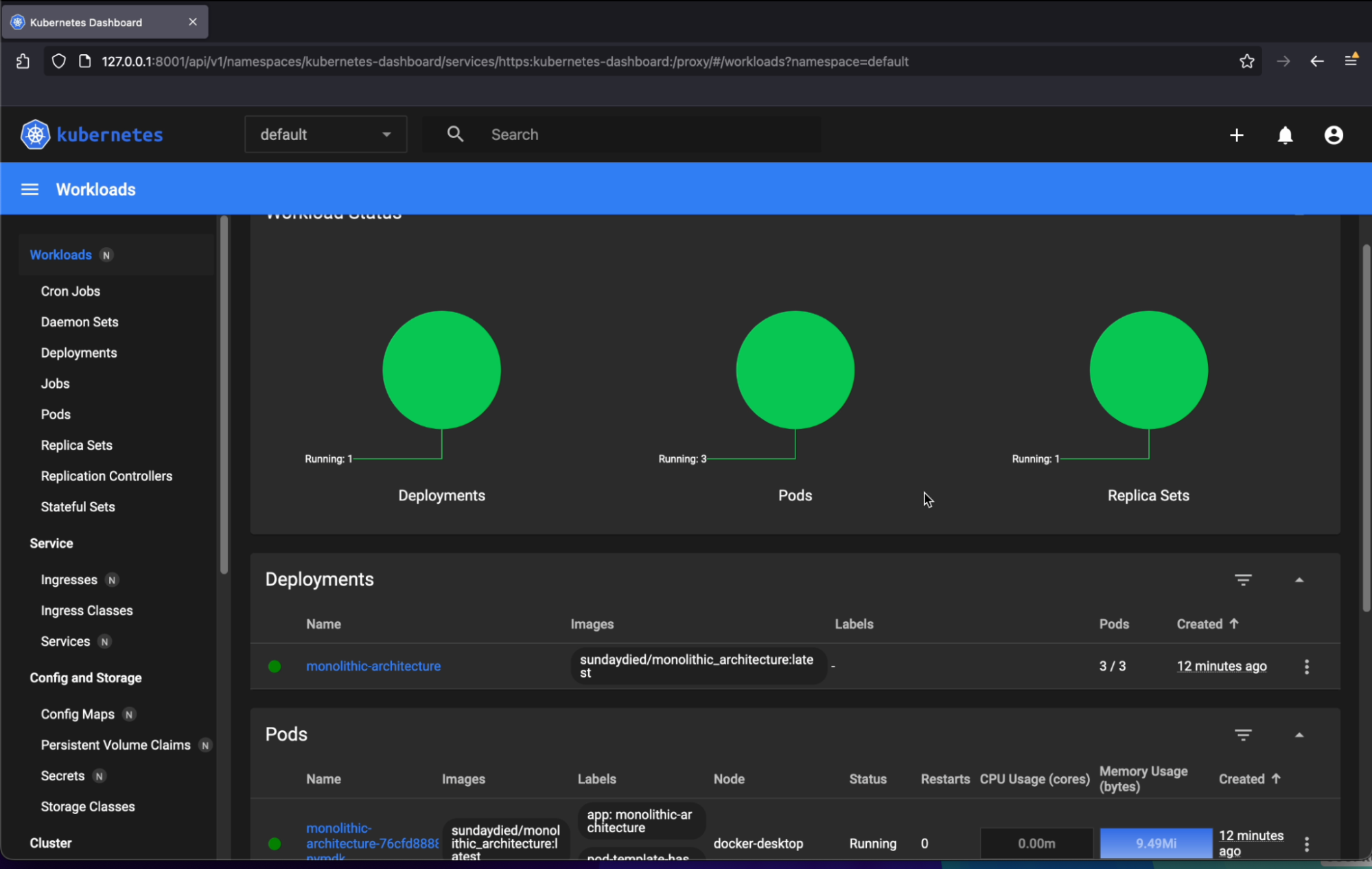

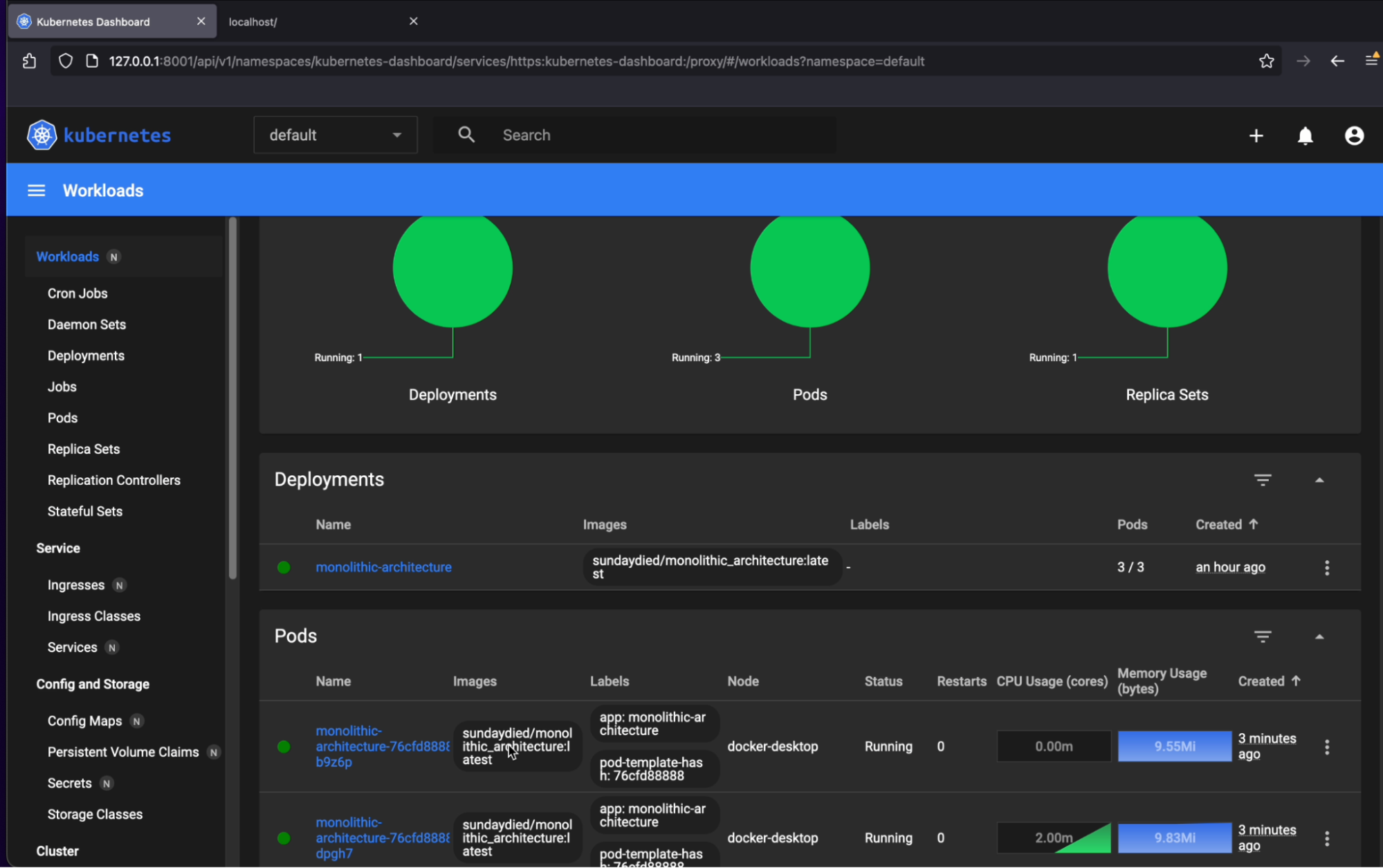

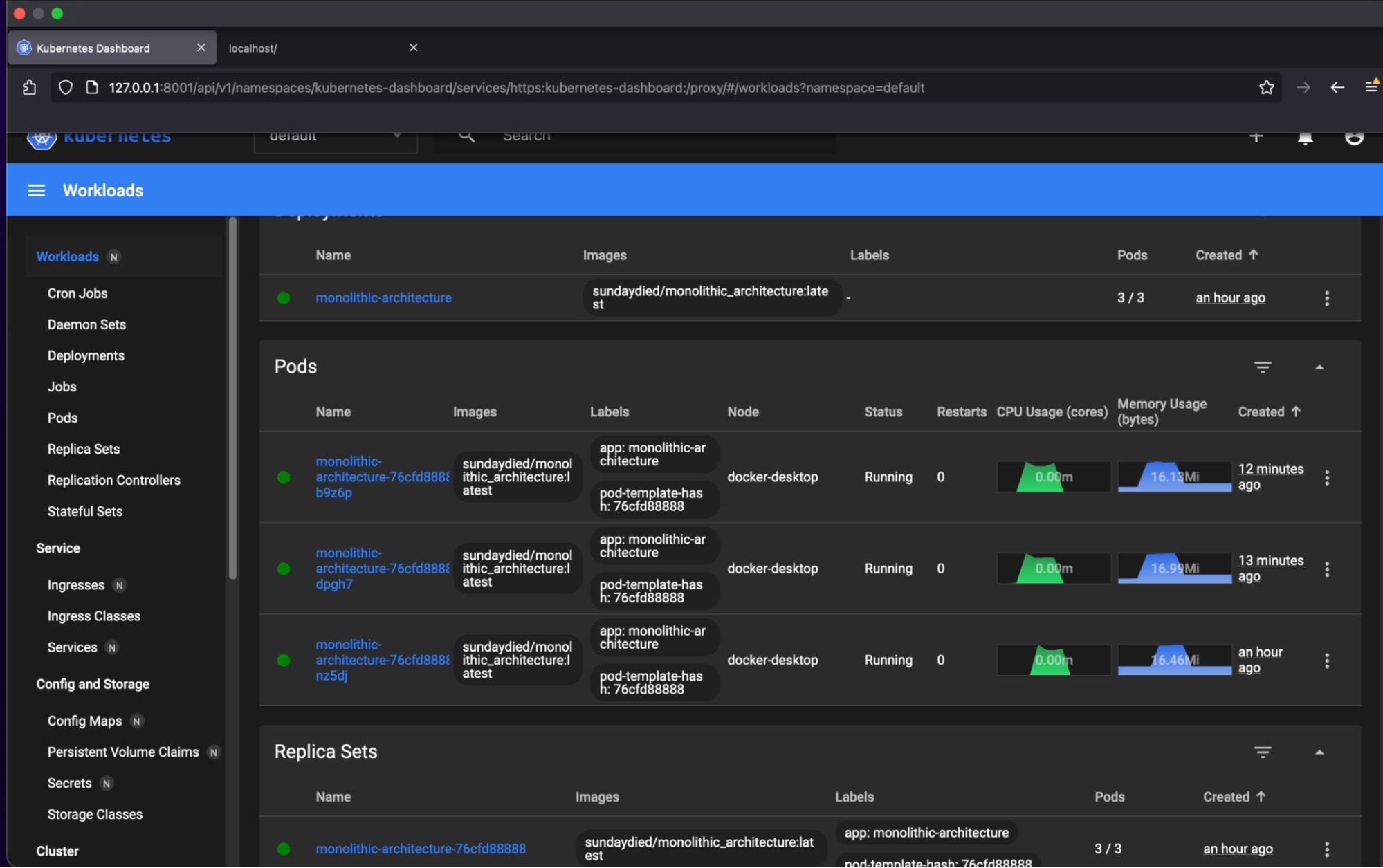

3.2. Initial state: before load test

We have 3 replicas of our application

k8 Dashboard: Initial State

k8 terminal: Initial State

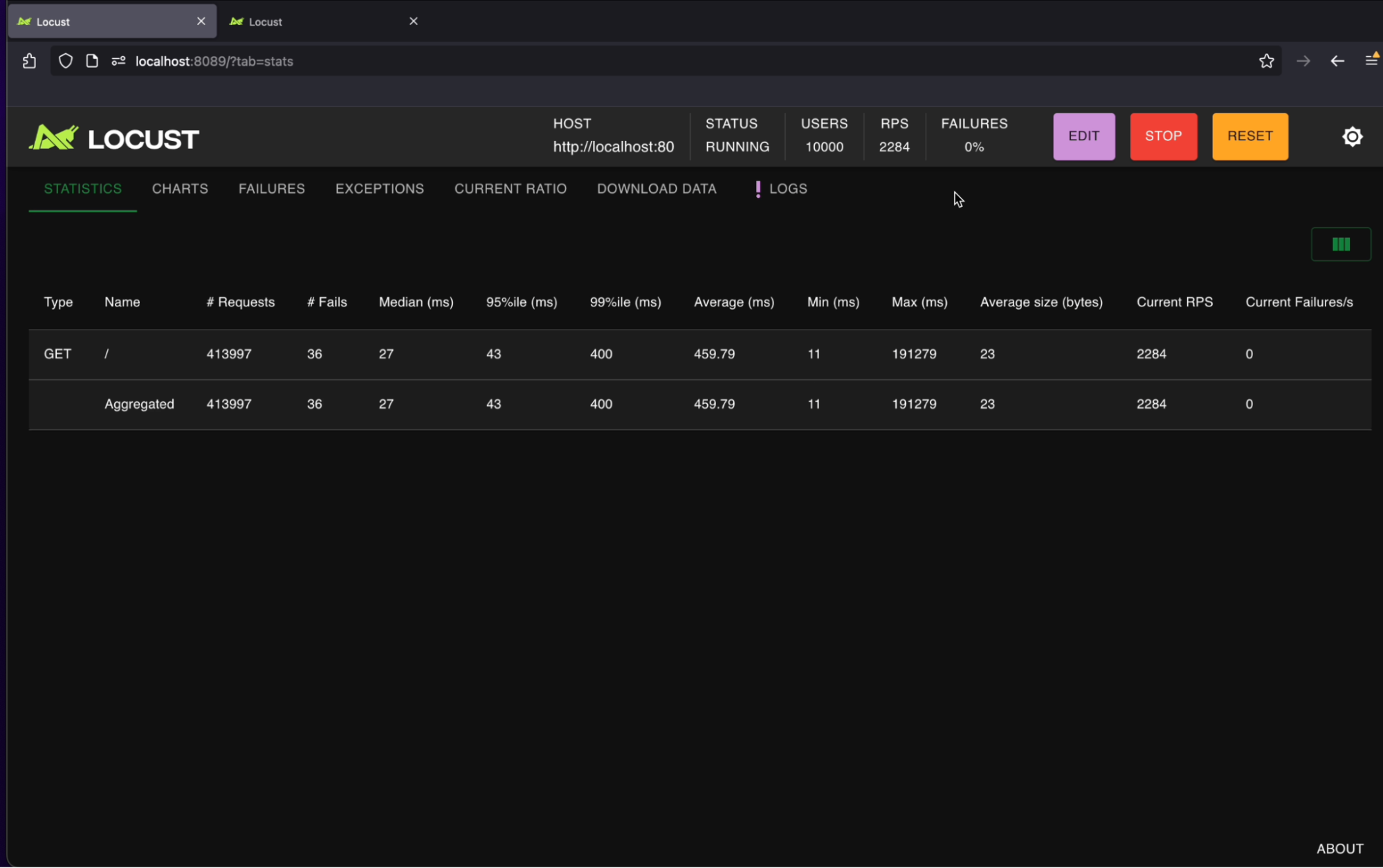

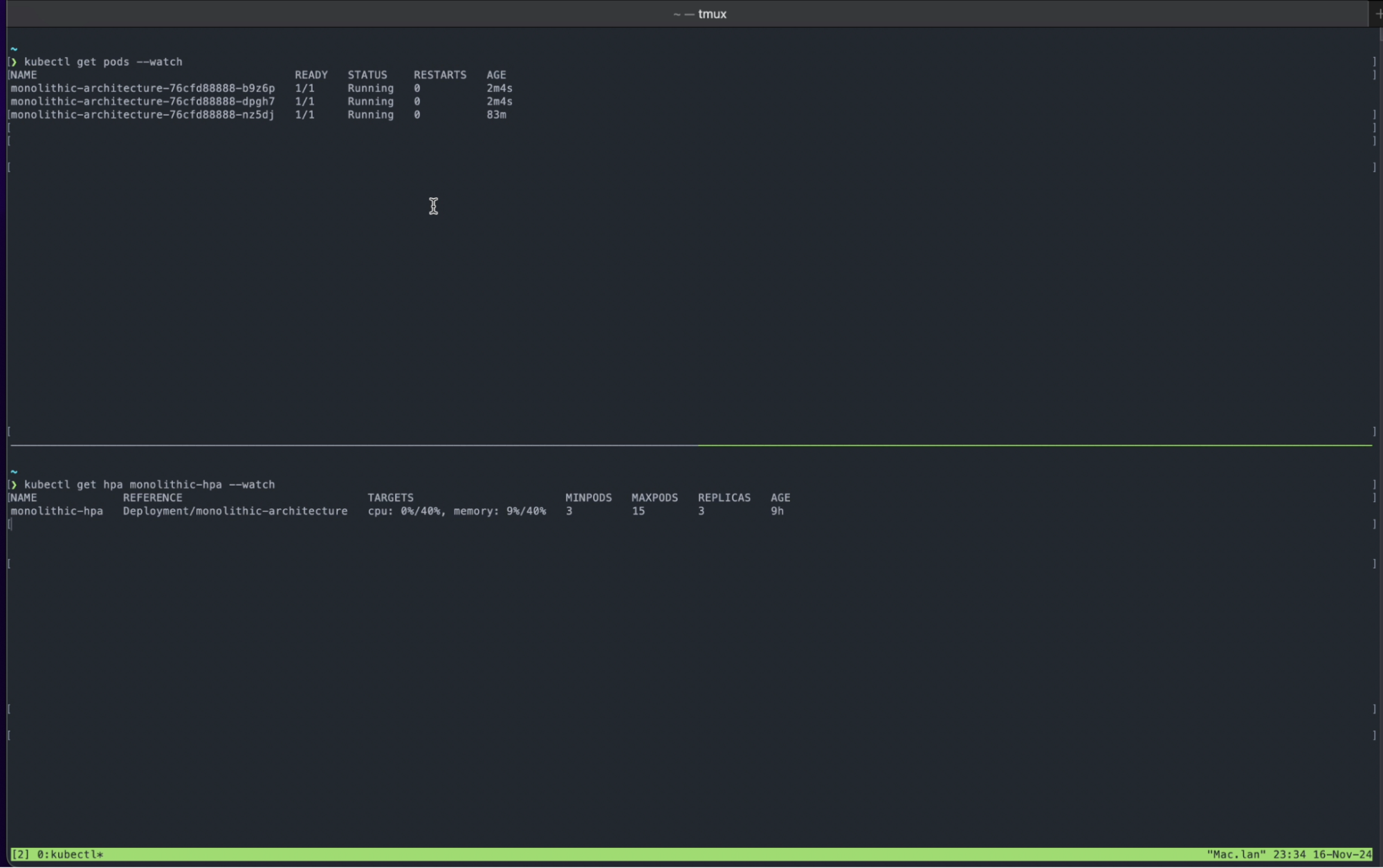

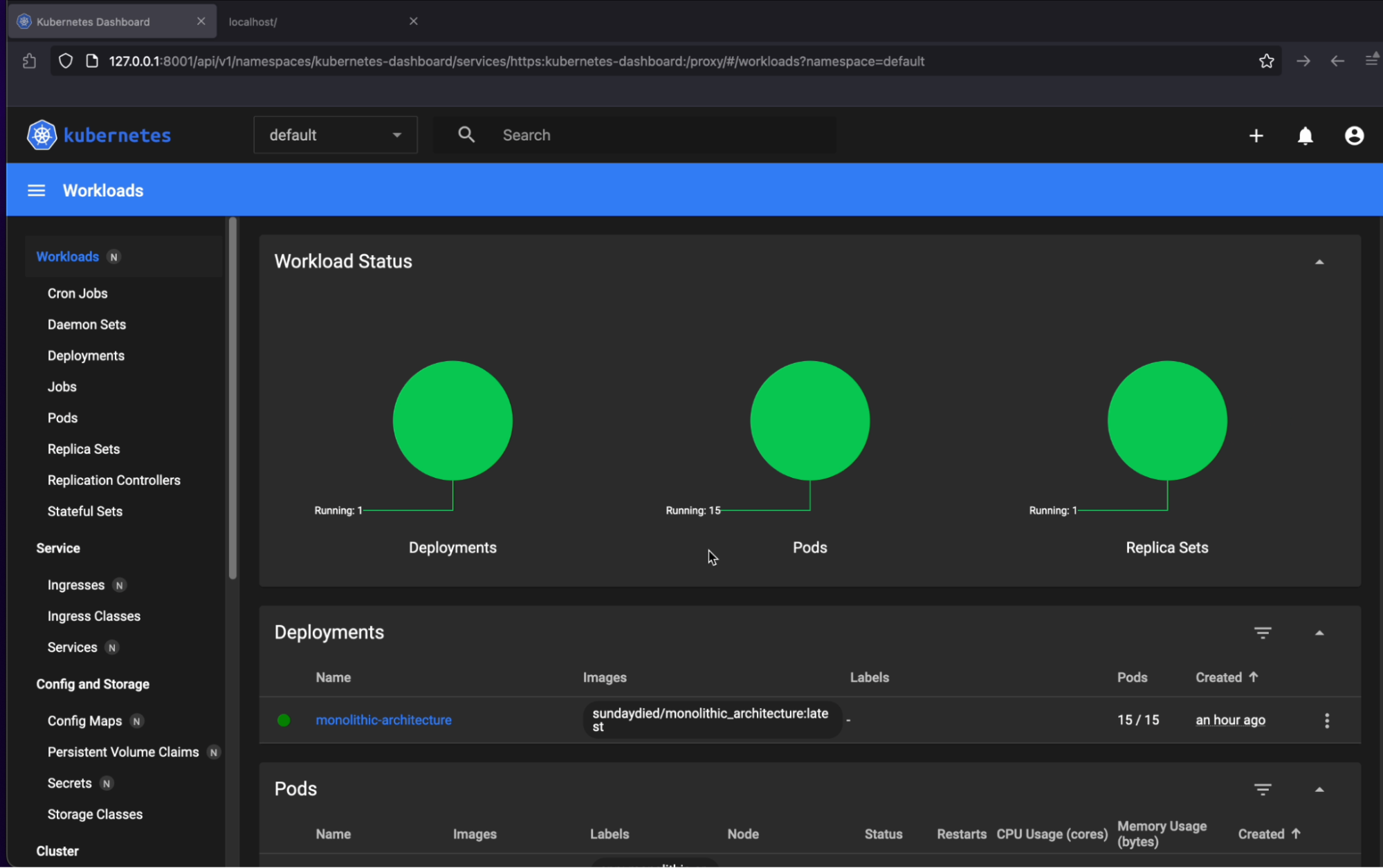

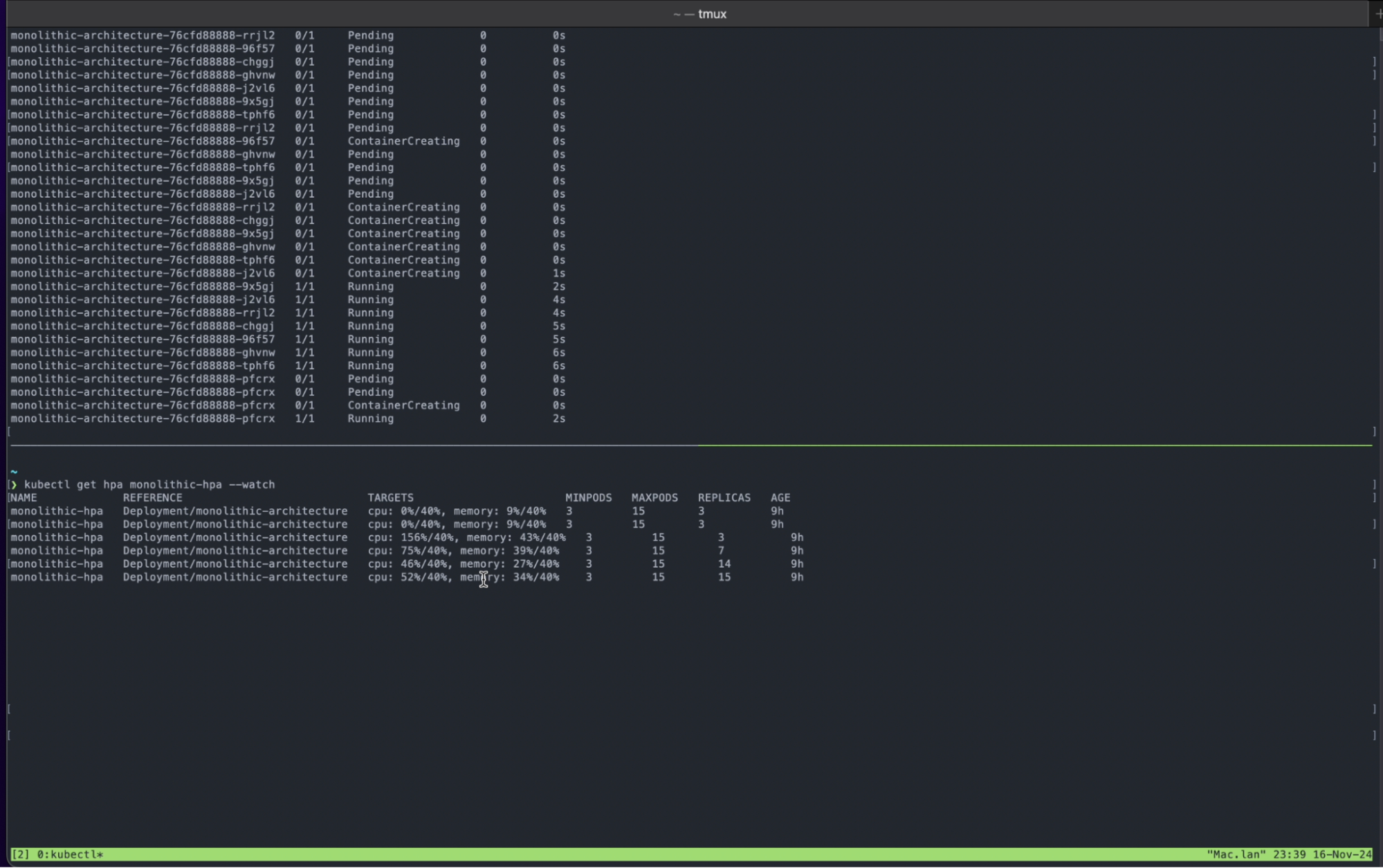

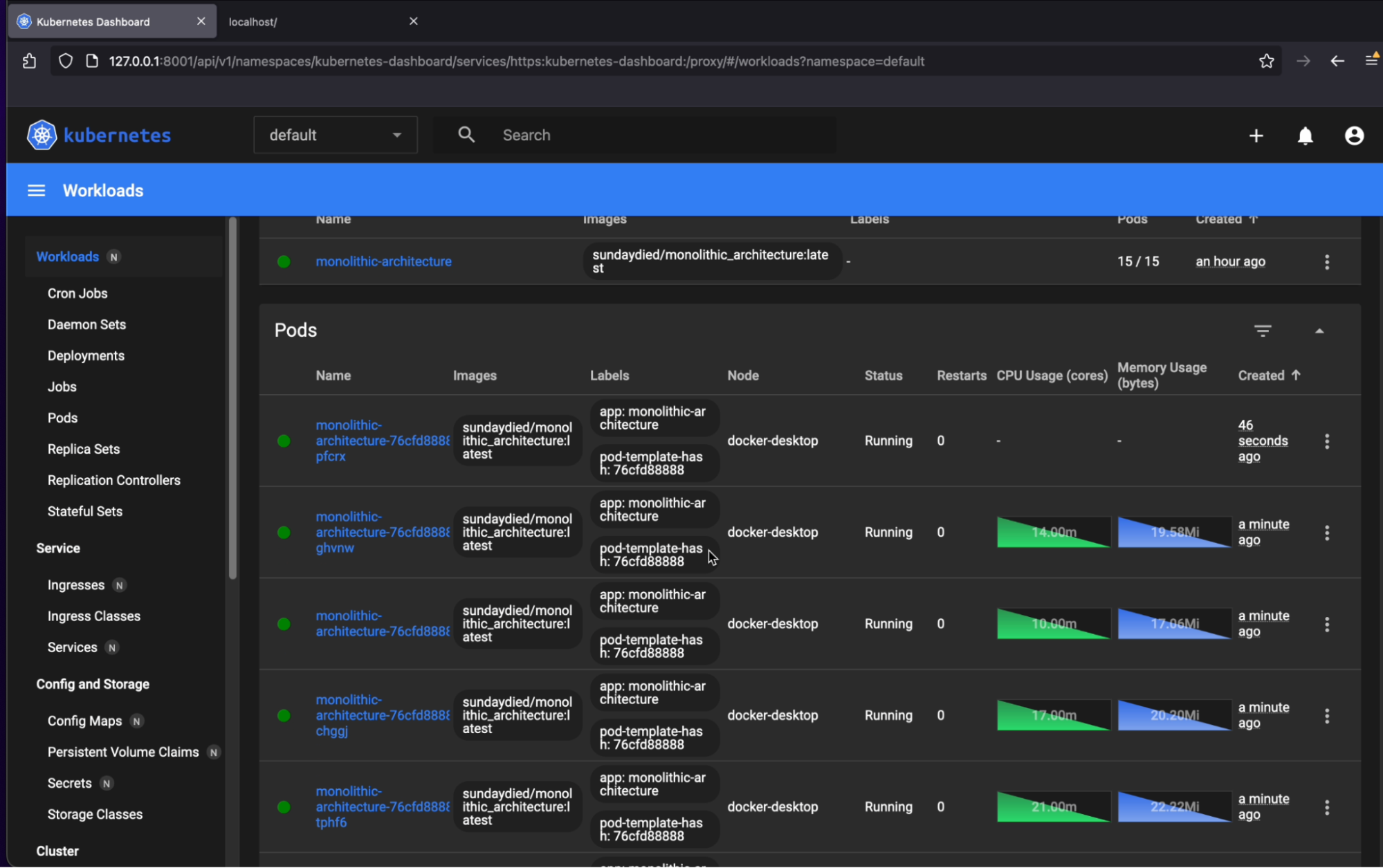

3.3. Stress state: during load test

Starting Load Test

Even though the load is increasing on only one of the endpoints (/, /service_a, /service_b), our full application is scaled up.

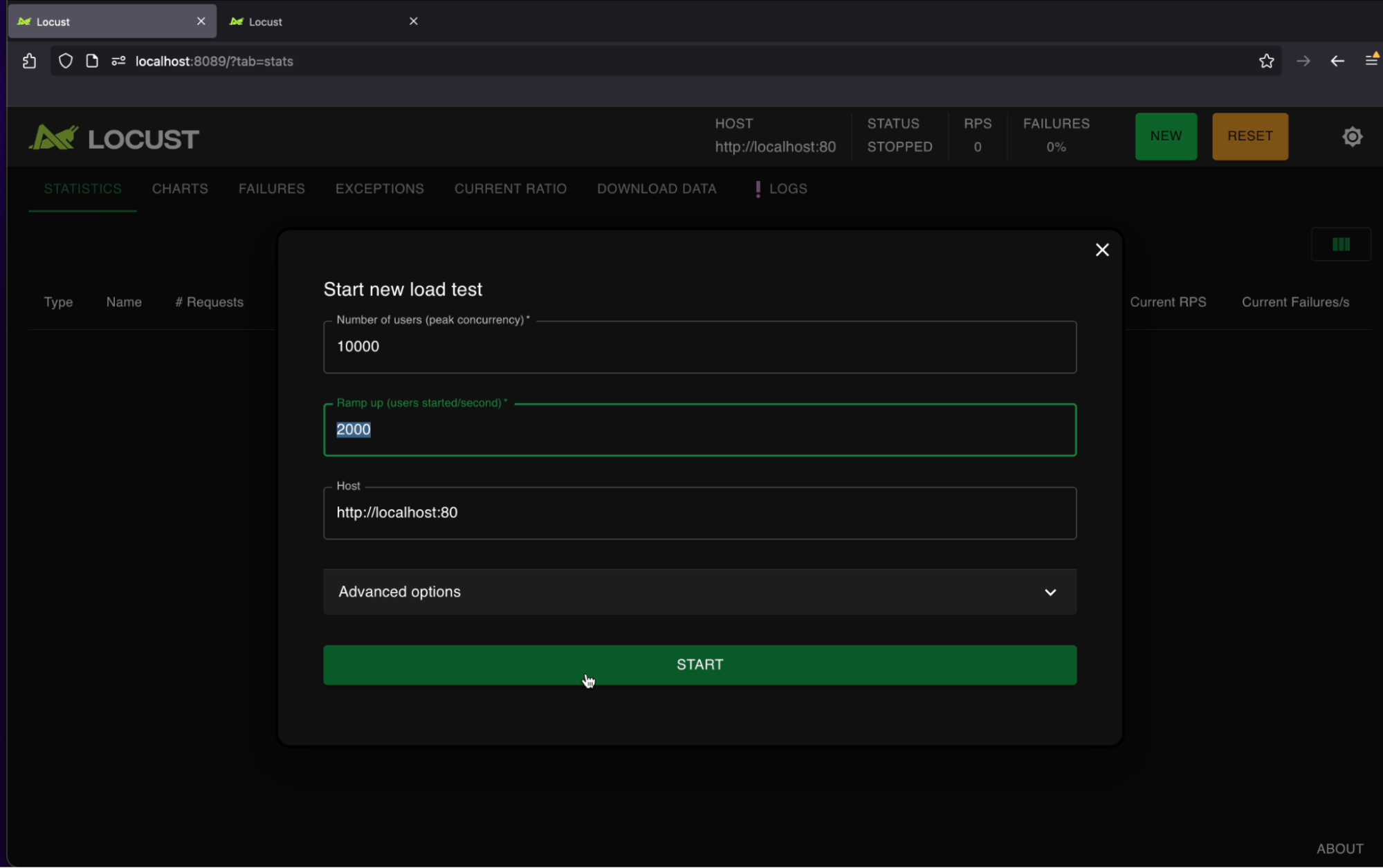

Load at its peak (10k users per second)

All 15 pods are up and running.
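How the autoscaler gets from 3 pods to 15 can be sketched with the replica formula from the Kubernetes HPA documentation: desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), clamped to the configured bounds. The bounds of 3 and 15 match this test; the 50% CPU target and the utilization readings are invented for illustration. The real controller also uses stabilization windows and other safeguards that this sketch leaves out.

```javascript
// Sketch of the HPA replica calculation for a CPU utilization metric.
// `tolerance` mirrors the controller's default of 0.1: if the ratio of
// current to target is within 10% of 1, the replica count is left alone.
function desiredReplicas(current, currentUtil, targetUtil, min, max, tolerance = 0.1) {
  const clamp = (n) => Math.min(max, Math.max(min, n));
  const ratio = currentUtil / targetUtil;
  if (Math.abs(ratio - 1) <= tolerance) {
    return clamp(current);
  }
  // Multiply before dividing to avoid floating point drift with whole numbers.
  return clamp(Math.ceil((current * currentUtil) / targetUtil));
}

// Hypothetical readings: 3 pods averaging 250% of their CPU request.
console.log(desiredReplicas(3, 250, 50, 3, 15)); // 15
// Even heavier load cannot push past maxReplicas.
console.log(desiredReplicas(3, 400, 50, 3, 15)); // 15
// After the test, 15 mostly idle pods shrink back to the minimum.
console.log(desiredReplicas(15, 10, 50, 3, 15)); // 3
// Small deviations from the target do not trigger scaling.
console.log(desiredReplicas(3, 52, 50, 3, 15)); // 3
```

Note that the calculation only sees the average utilization of the whole deployment, so it cannot tell which endpoint caused the load.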

k8 Dashboard: Stress State

k8 Terminal: Stress State

The load balancer distributes the load across the pods, as you can see in the picture.

This approach is very easy to set up. However, it comes with a big drawback: the whole application is scaled even when only one endpoint is busy, which can be quite expensive in some cases.

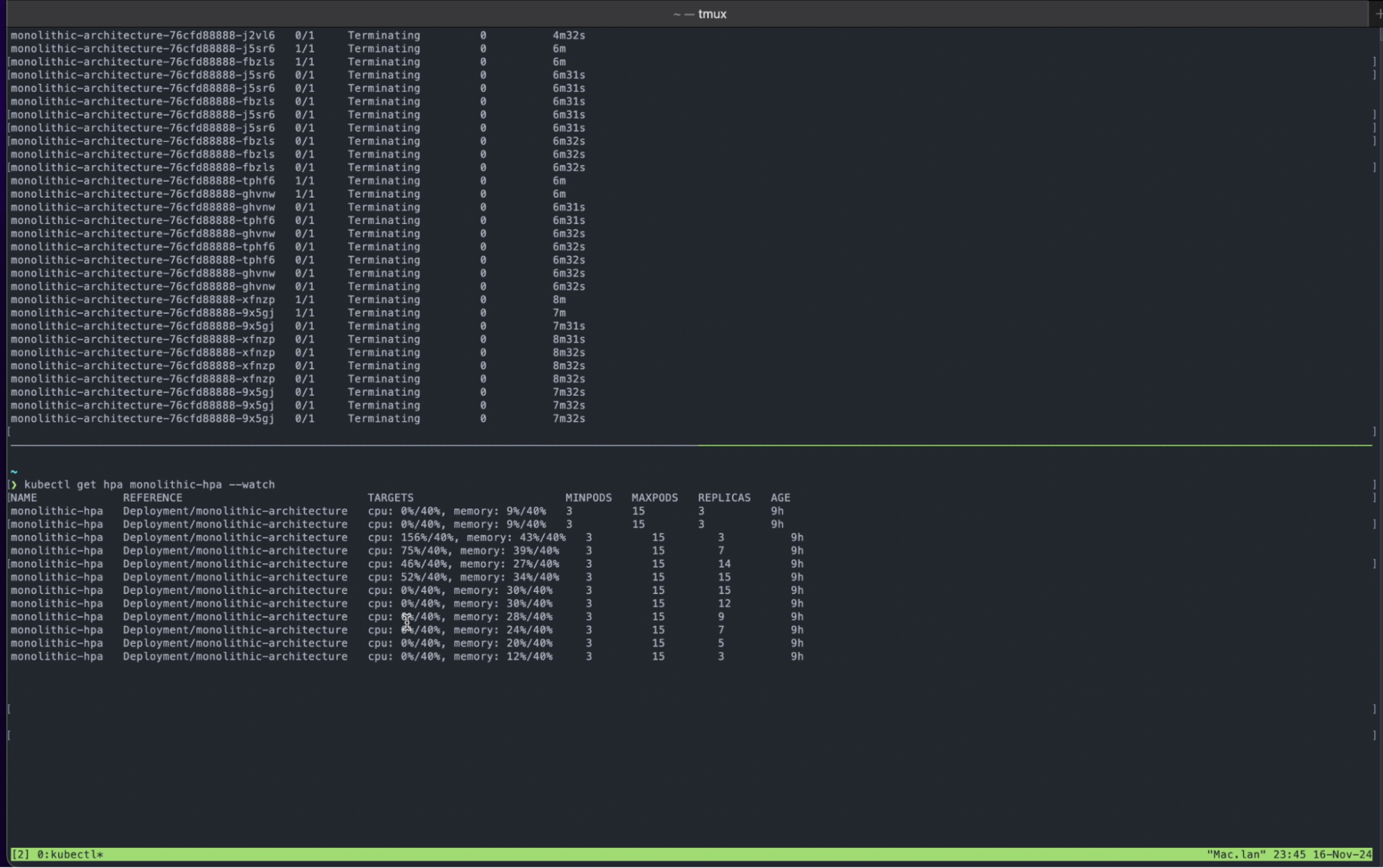

3.4. Final state: after load test

Kubernetes scaling down the application.

k8 Dashboard: Final State

k8 Terminal: Final State

Excellent performance under high load, 0 failure rate

Locust report result

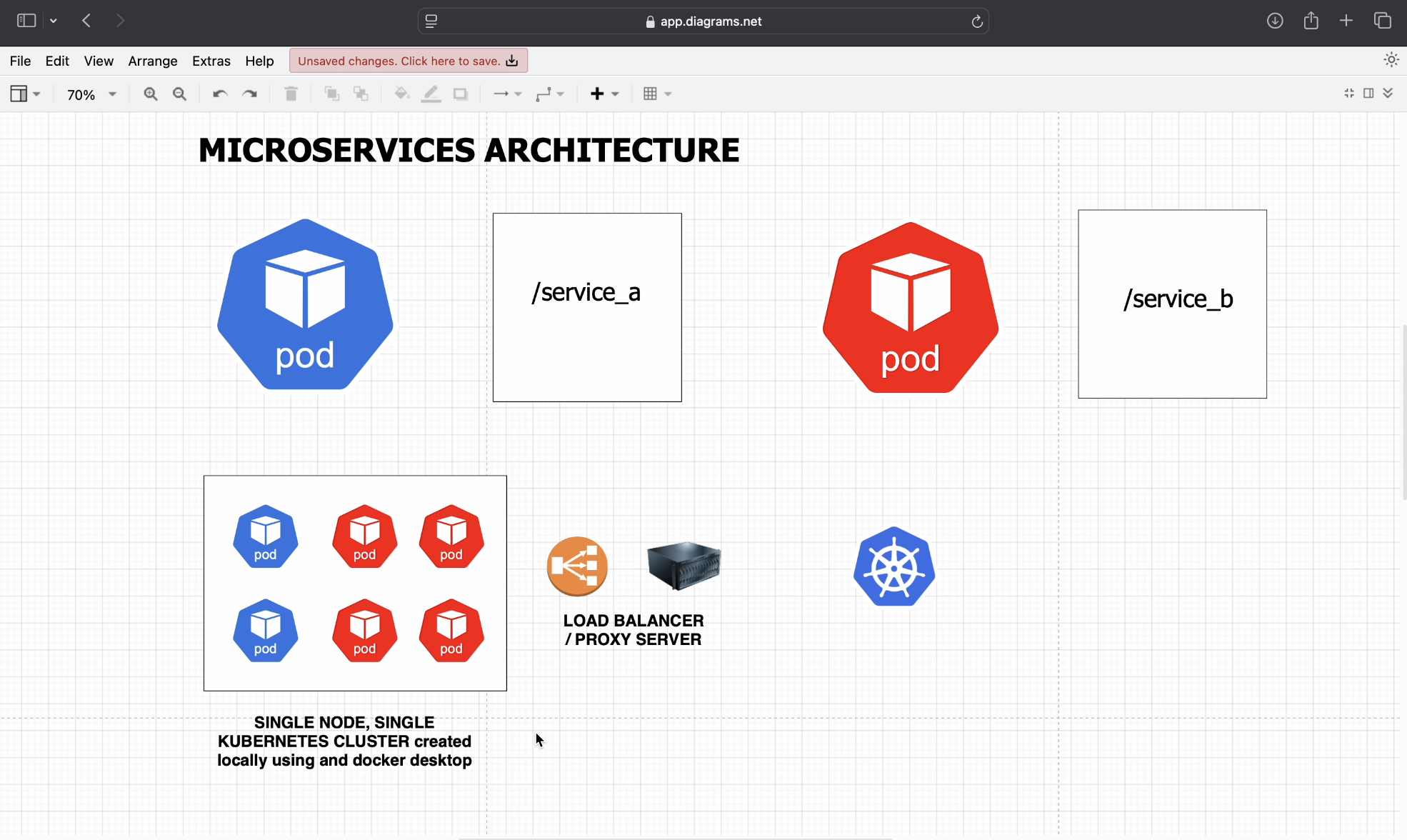

4. Microservices architecture:-

4.1. Setup explanation:-

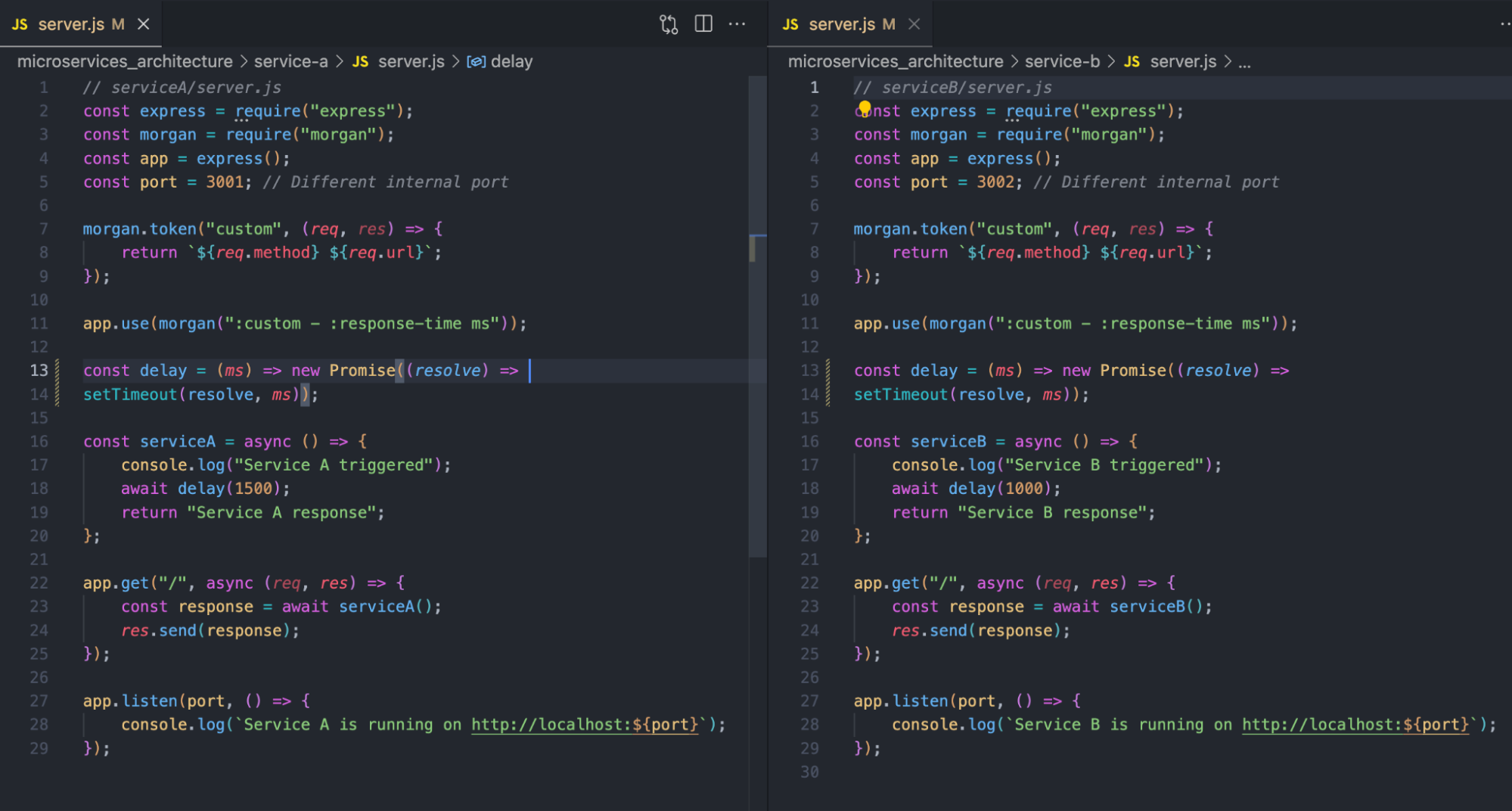

Here we decouple the services that were tightly packed together in the monolithic architecture, give each one its own Express server, and pack each one in its own pod.

Packing each microservice in its own pod

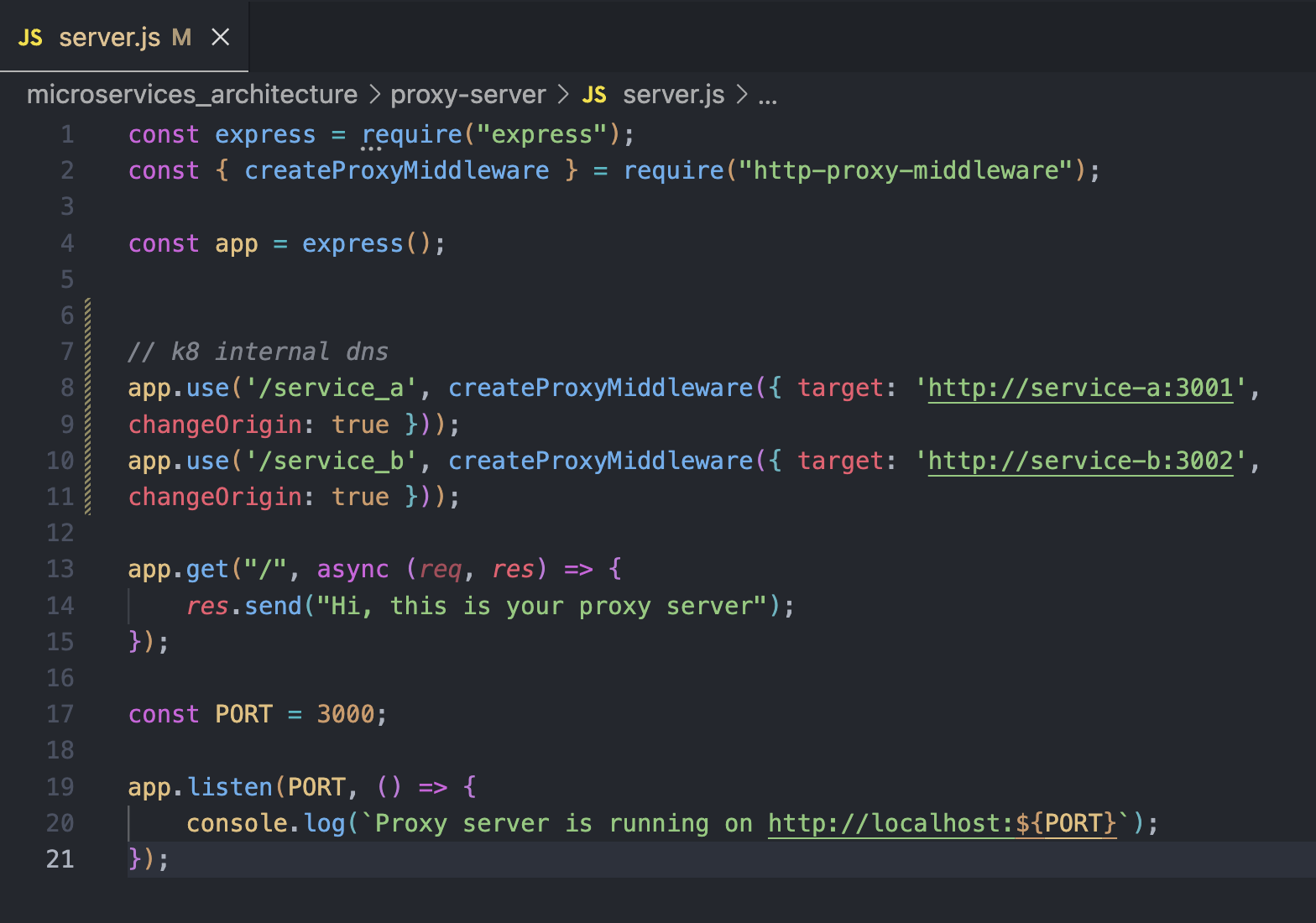

We also set up a proxy server that forwards each request to the correct pod, service A or service B. This proxy server also acts as a load balancer: it distributes the load for service B among the service B replicas, improving performance.
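The routing and load-spreading idea can be sketched as a small path-based router with round-robin selection. This is an illustration only: the `createRouter` helper and the upstream addresses are hypothetical, and in a Kubernetes cluster a proxy would more commonly forward to each service's Service name and let Kubernetes spread the requests across the replicas.

```javascript
// Builds a router that maps a path prefix to a list of upstream targets
// and hands the targets out in round-robin order.
function createRouter(upstreams) {
  const nextIndex = new Map(); // prefix -> index of the next target to use

  return function pickUpstream(path) {
    for (const [prefix, targets] of Object.entries(upstreams)) {
      if (path === prefix || path.startsWith(prefix + "/")) {
        const i = nextIndex.get(prefix) ?? 0;
        nextIndex.set(prefix, (i + 1) % targets.length);
        return targets[i];
      }
    }
    return null; // no service owns this path
  };
}

// Hypothetical addresses for two replicas of each service.
const pickUpstream = createRouter({
  "/service_a": ["http://10.0.0.11:3000", "http://10.0.0.12:3000"],
  "/service_b": ["http://10.0.0.21:3000", "http://10.0.0.22:3000"],
});

console.log(pickUpstream("/service_b")); // http://10.0.0.21:3000
console.log(pickUpstream("/service_b")); // http://10.0.0.22:3000
console.log(pickUpstream("/service_b")); // http://10.0.0.21:3000
console.log(pickUpstream("/service_a")); // http://10.0.0.11:3000
console.log(pickUpstream("/unknown")); // null
```

Each prefix keeps its own counter, so traffic to service B never changes which replica of service A is picked next.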

Giving the proxy server its own Express server

Giving service A and service B their own Express servers

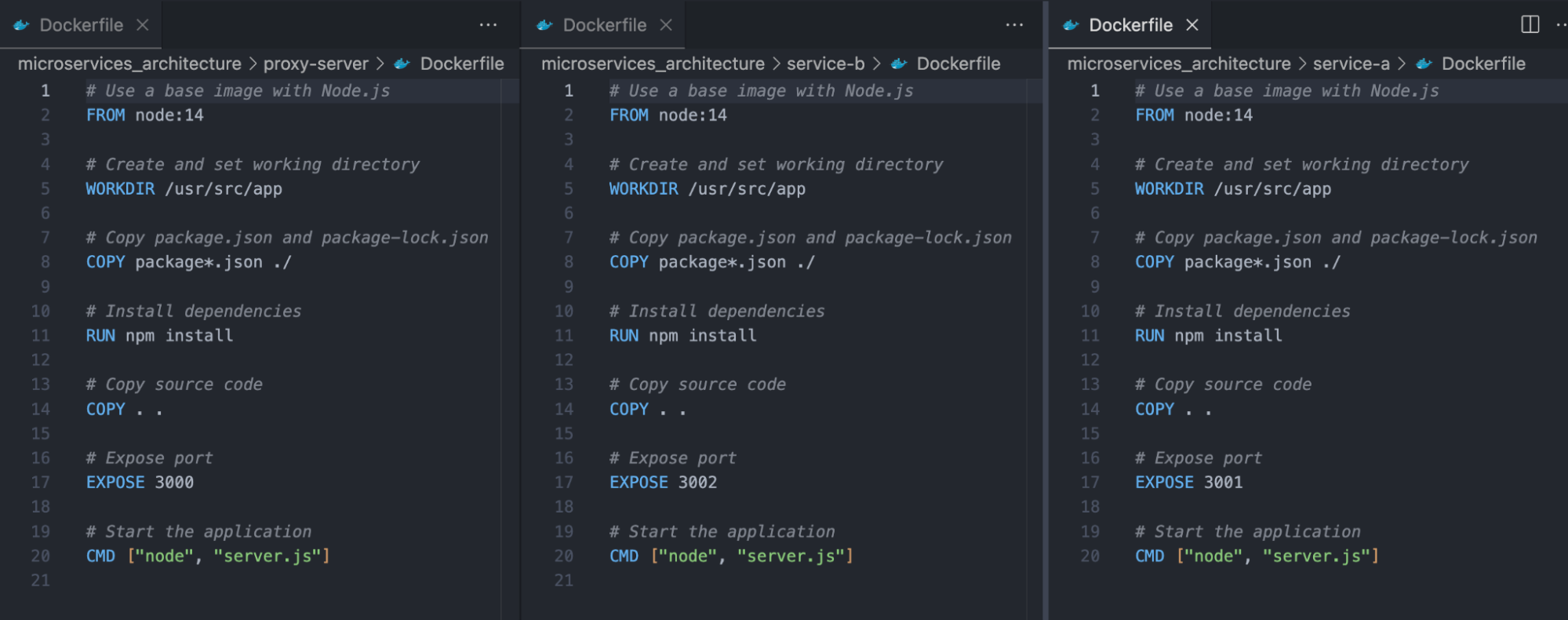

Dockerizing all the Express servers, building their images, and publishing them on Docker Hub

HPA and proxy server, which also acts as a load balancer

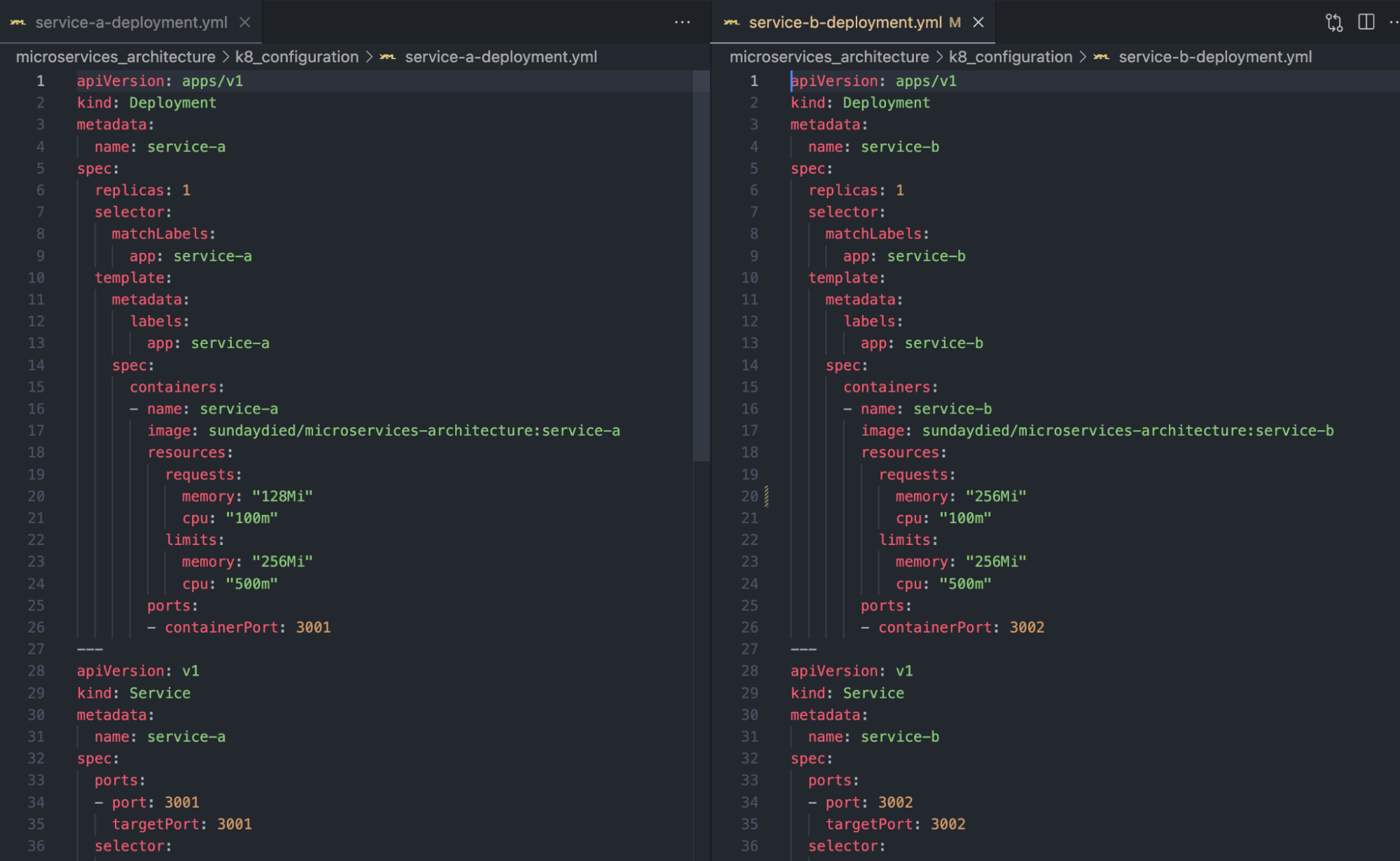

Deployment configuration for individual service

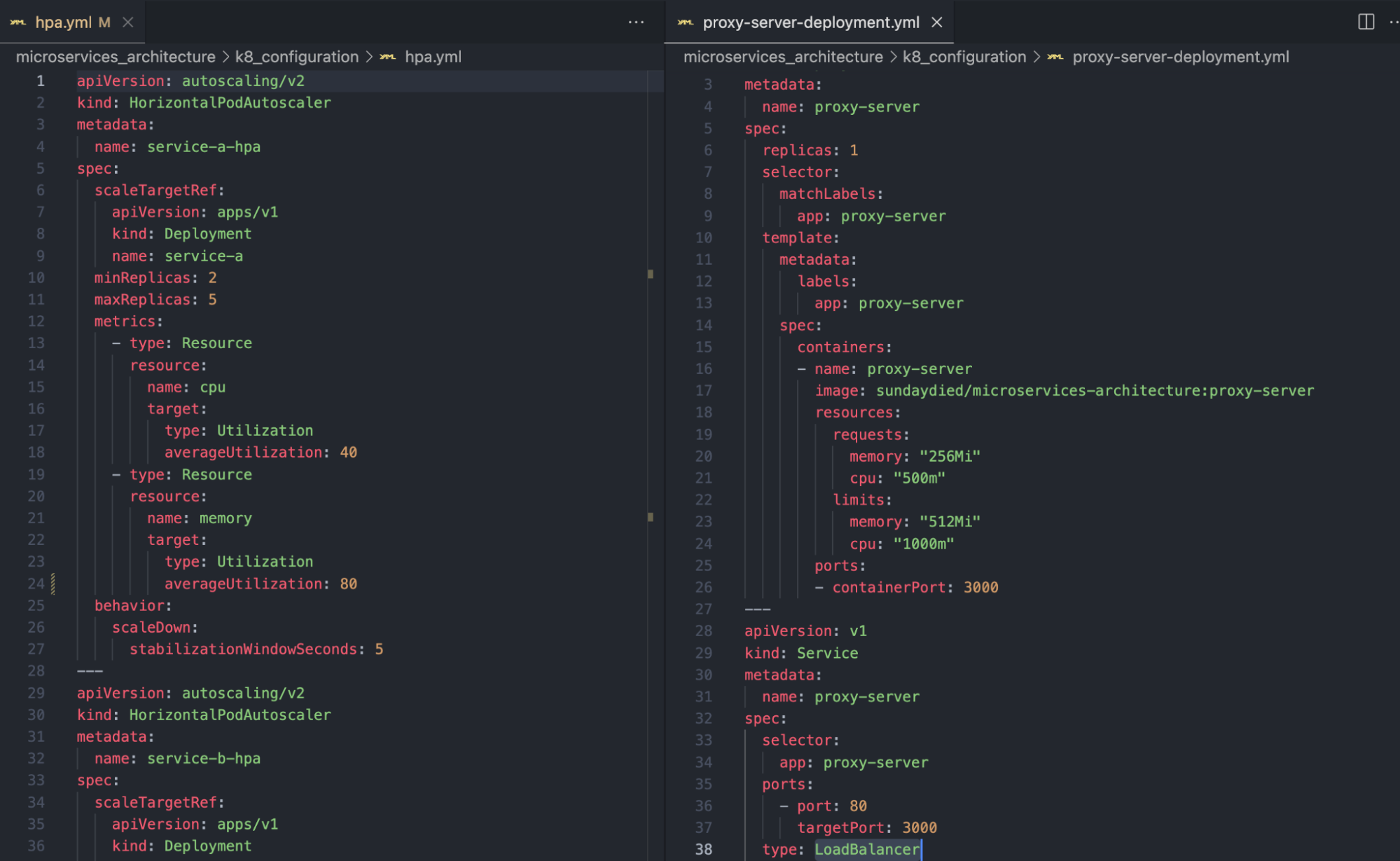

Kubernetes configuration files:

- deployment.yml: Specifies the pod size for each service.

- hpa.yml: Independent scaling criteria for each individual service.

- proxy-server-deployment.yml: Allocates sufficient resources to the proxy server and makes it a load balancer type.

4.2. Initial state: before load test

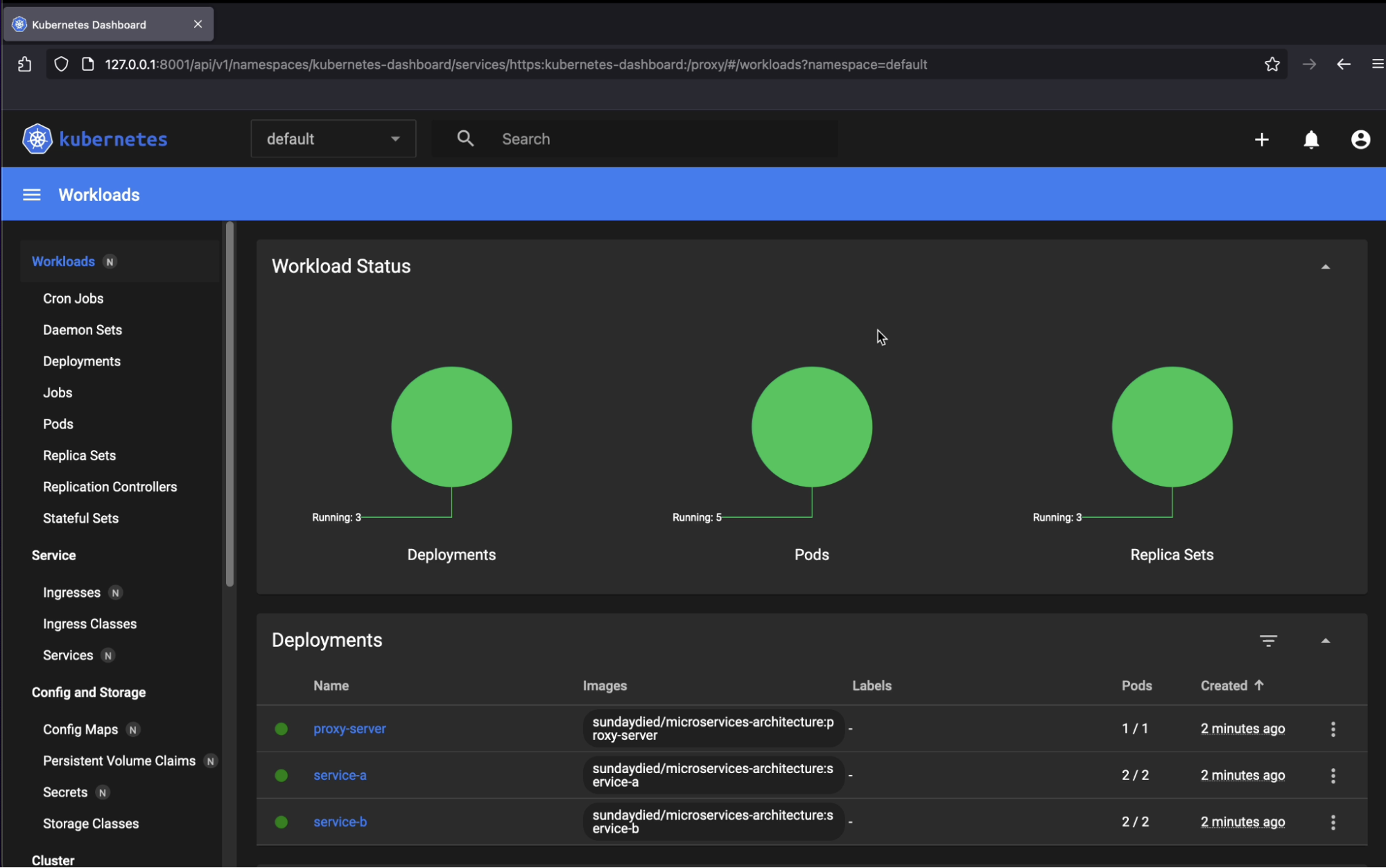

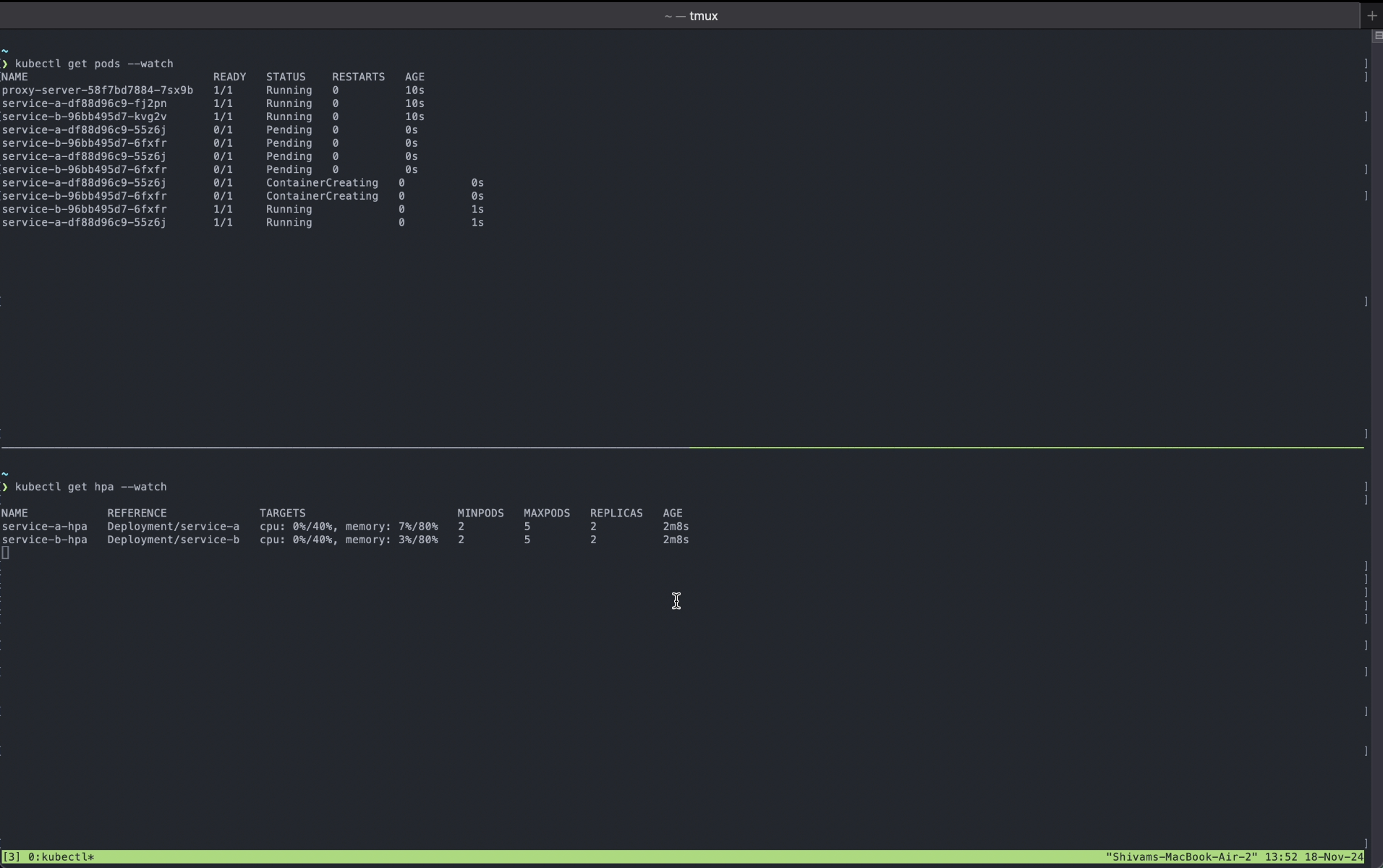

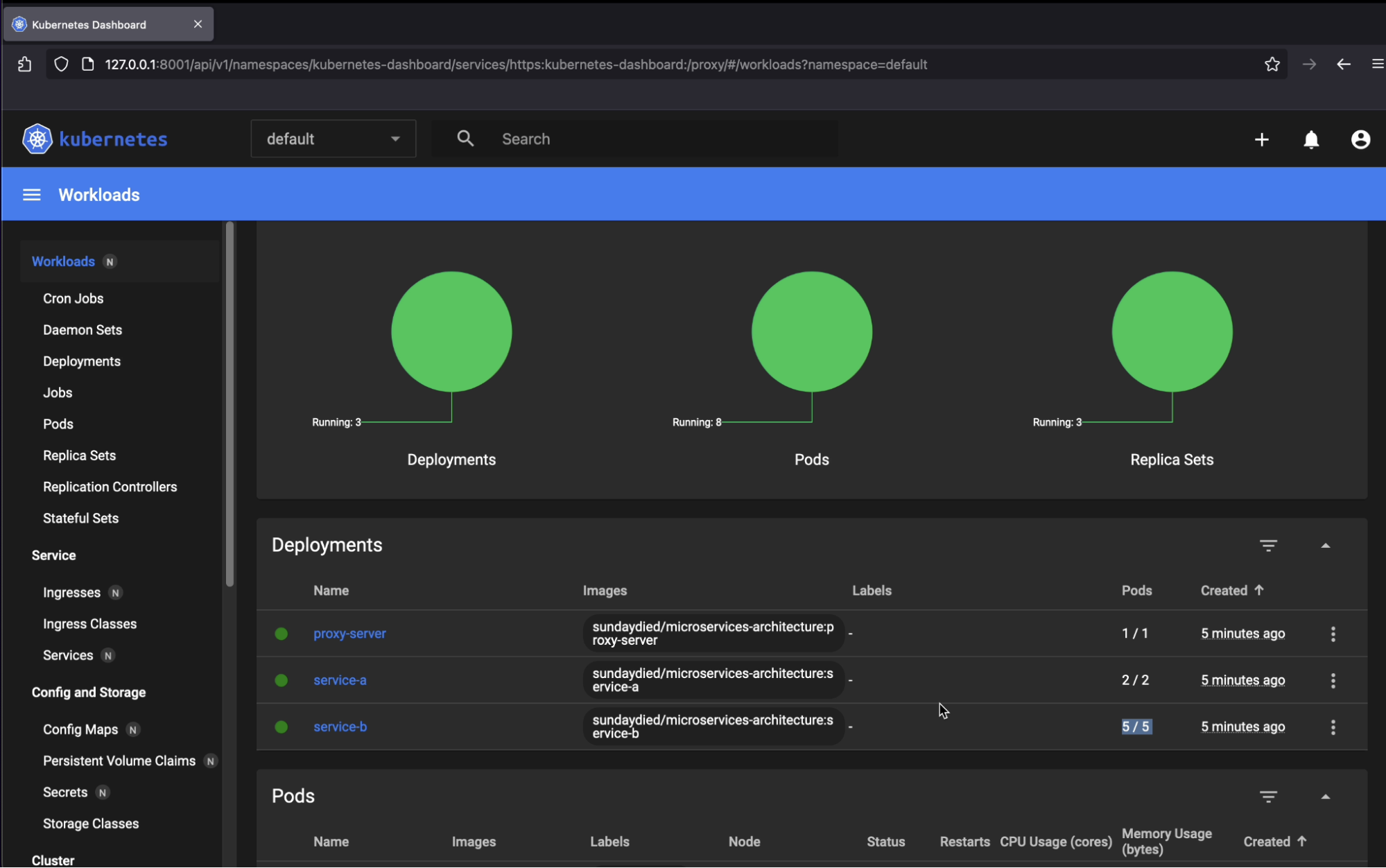

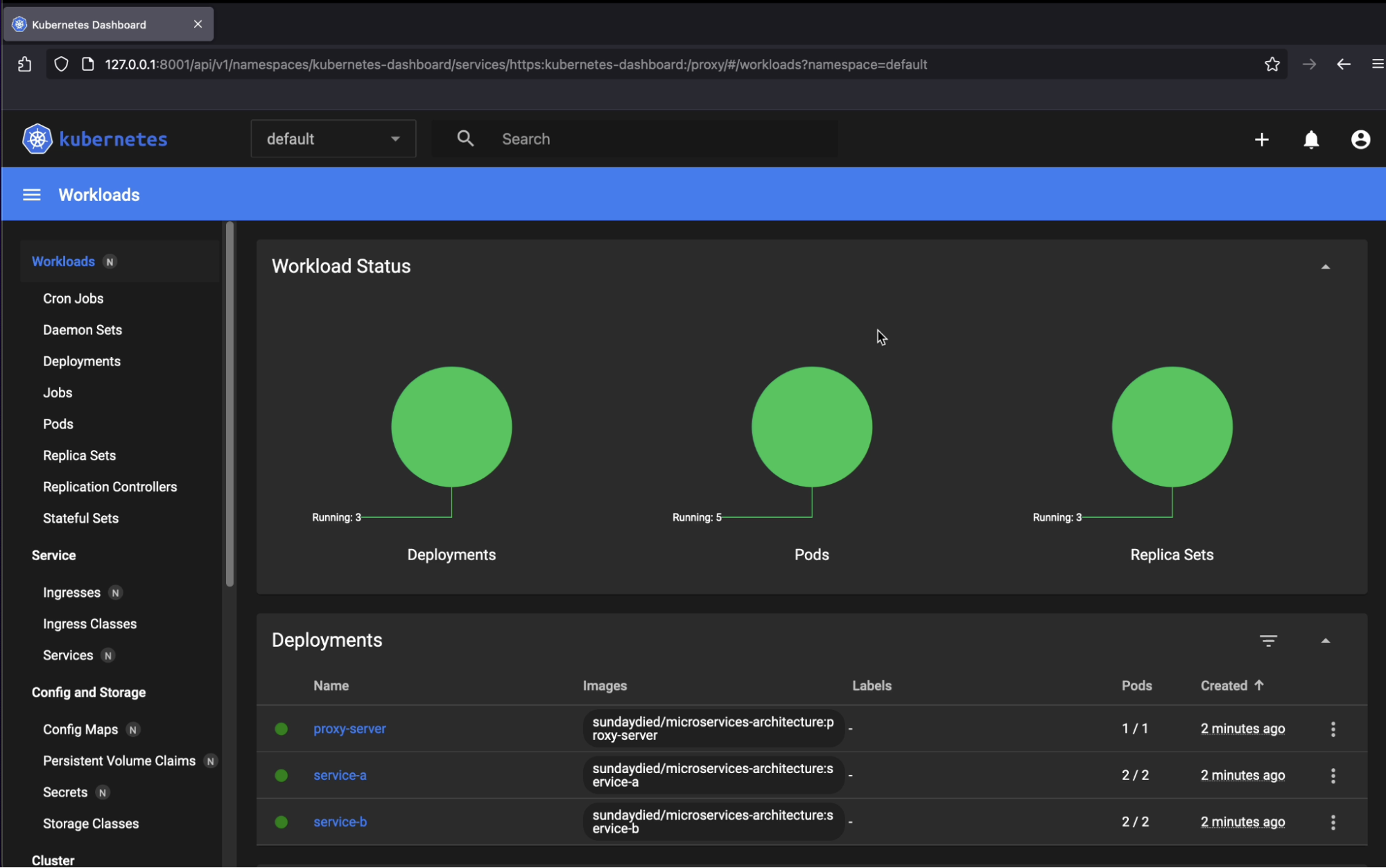

5 pods in total: 1 replica of the proxy server, 2 replicas of service A, and 2 replicas of service B.

k8 Dashboard: Initial State

k8 Terminal: Initial State

4.3. Stress state: during load test

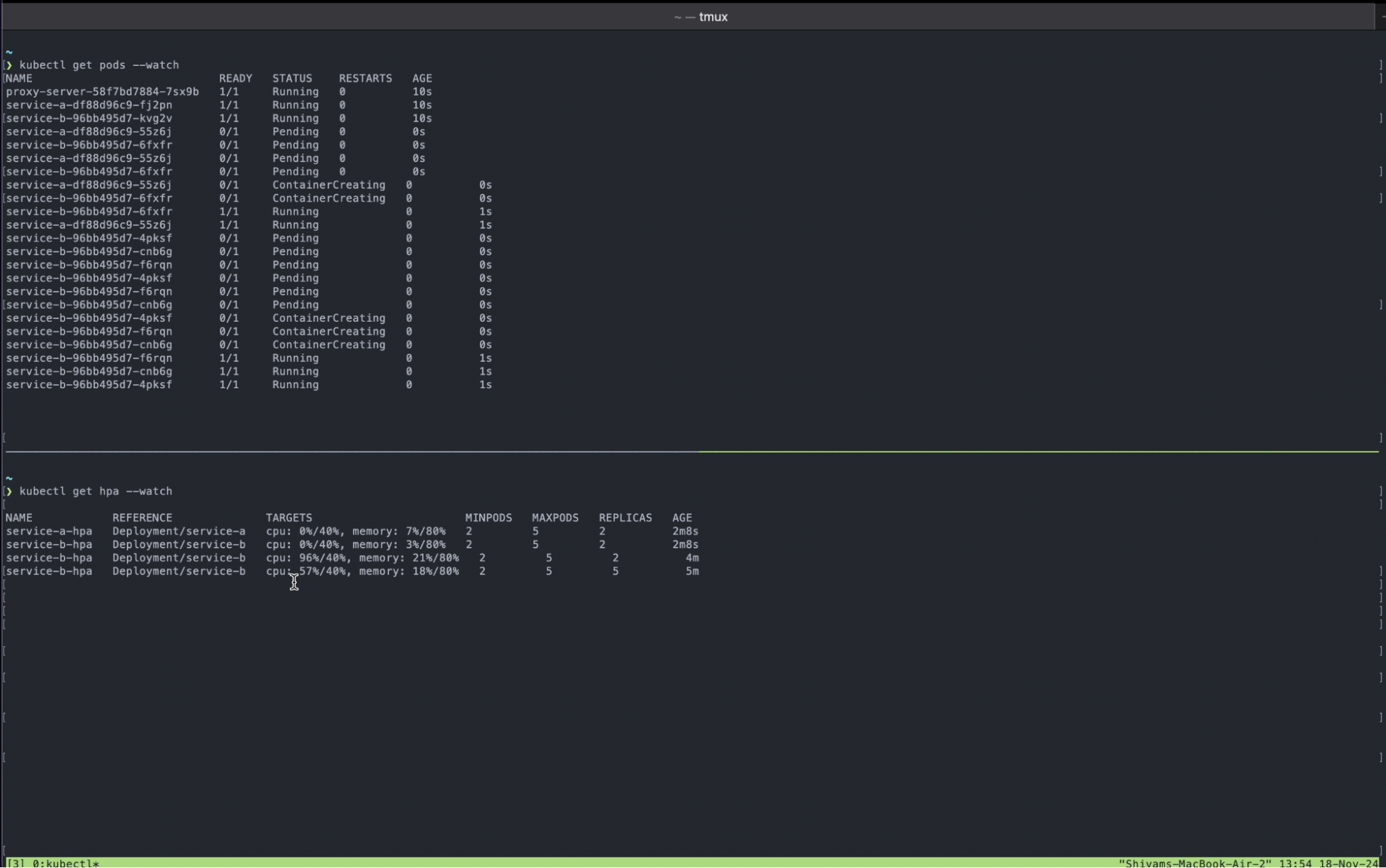

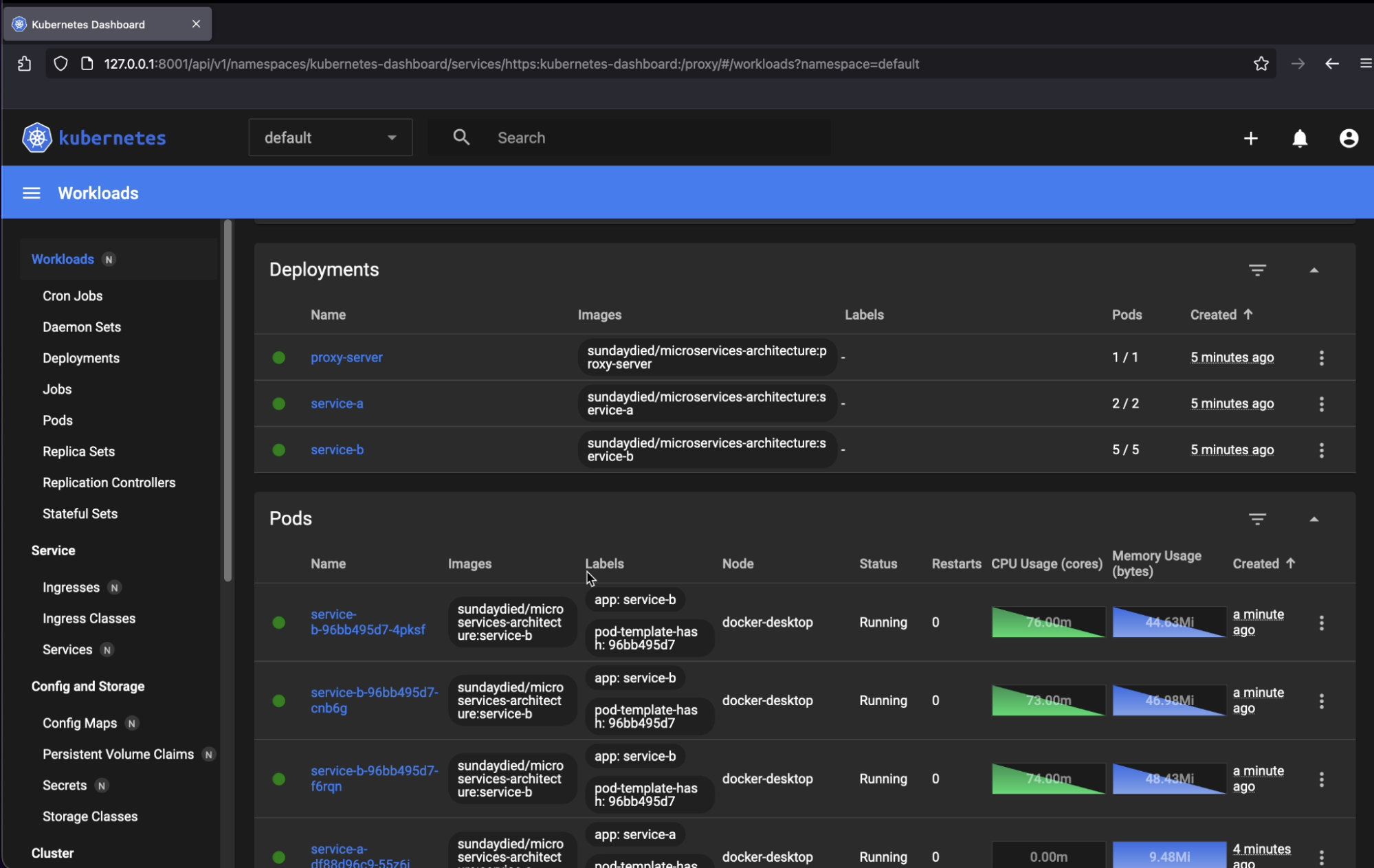

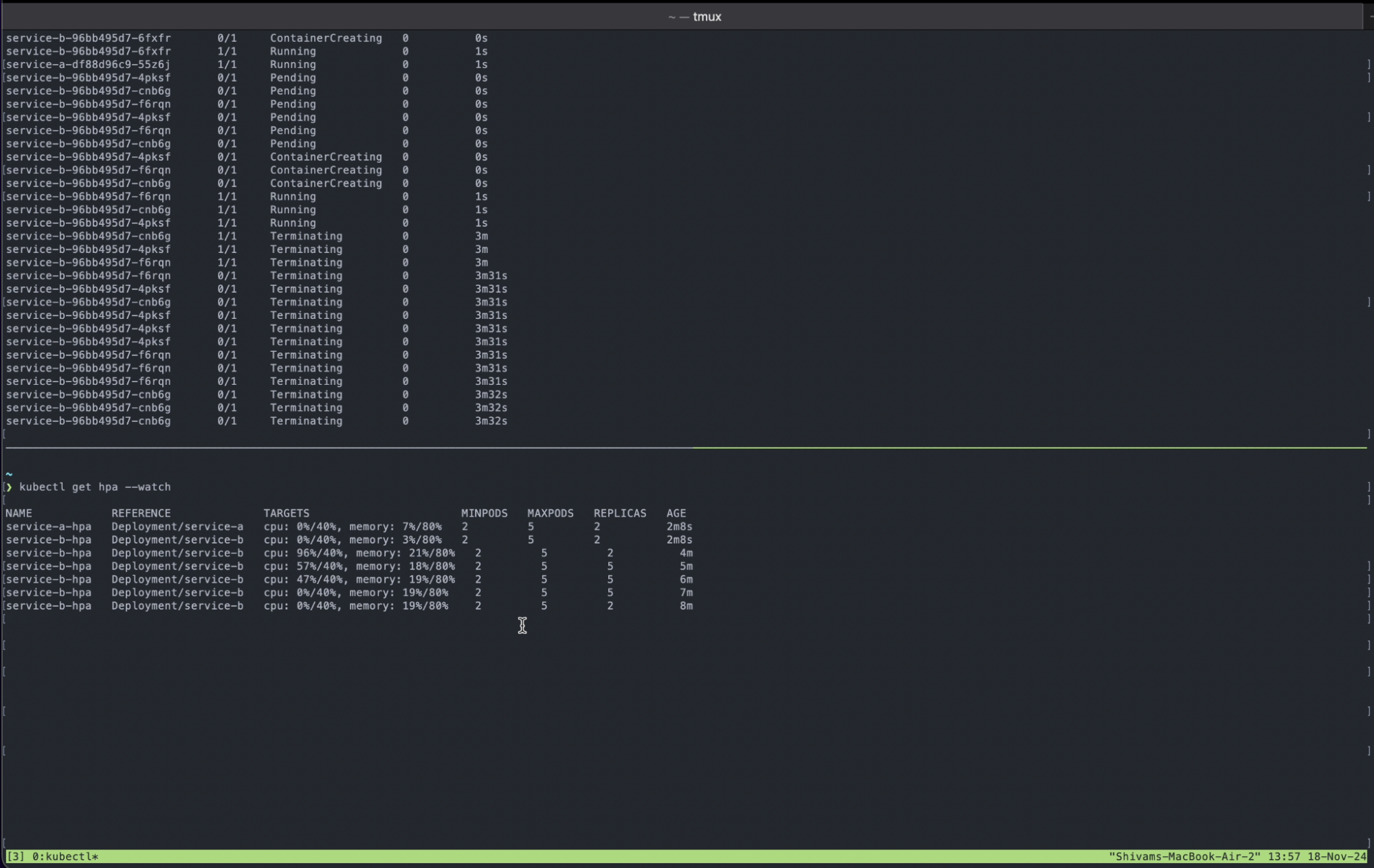

Starting the load test on http://localhost:80/service_b. This should spin up more replicas of the service B pods only, unlike the monolithic approach, which scaled the full application.

5 out of 5 replicas of service B have been spun up, with no extra replicas of service A, the proxy server, or the full application.
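The difference from the monolithic run can be sketched by applying the HPA replica formula, ceil(currentReplicas × currentUtilization / targetUtilization) clamped to the bounds, to each deployment separately. The starting replica counts and the cap of 5 for service B follow this article. The deployment names, the utilization numbers, the 50% target, the bounds for service A, and the choice to treat the proxy as a fixed single replica are assumptions.

```javascript
// HPA-style replica calculation for one deployment.
function desiredReplicas(current, utilization, target, min, max) {
  const desired = Math.ceil((current * utilization) / target);
  return Math.min(max, Math.max(min, desired));
}

const TARGET_UTILIZATION = 50; // assumed CPU target in percent

// Assumed: the proxy has no autoscaler and stays at one replica.
const fixed = { "proxy-server": 1 };

// Each service has its own autoscaler and its own utilization reading.
// Only service B is under load in this hypothetical snapshot.
const autoscaled = {
  "service-a": { replicas: 2, min: 2, max: 5, utilization: 20 },
  "service-b": { replicas: 2, min: 2, max: 5, utilization: 200 },
};

// Runs the calculation independently for every autoscaled deployment.
function scaleAll(deployments, target) {
  const result = {};
  for (const [name, d] of Object.entries(deployments)) {
    result[name] = desiredReplicas(d.replicas, d.utilization, target, d.min, d.max);
  }
  return result;
}

const after = { ...fixed, ...scaleAll(autoscaled, TARGET_UTILIZATION) };
console.log(after); // { 'proxy-server': 1, 'service-a': 2, 'service-b': 5 }

const totalPods = Object.values(after).reduce((sum, n) => sum + n, 0);
console.log(totalPods); // 8
```

Since each autoscaler only sees its own deployment's utilization, the load on `/service_b` leaves the replica count of service A untouched.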

k8 Dashboard: Stress State

k8 Terminal: Stress State

Load balancer distributing the load

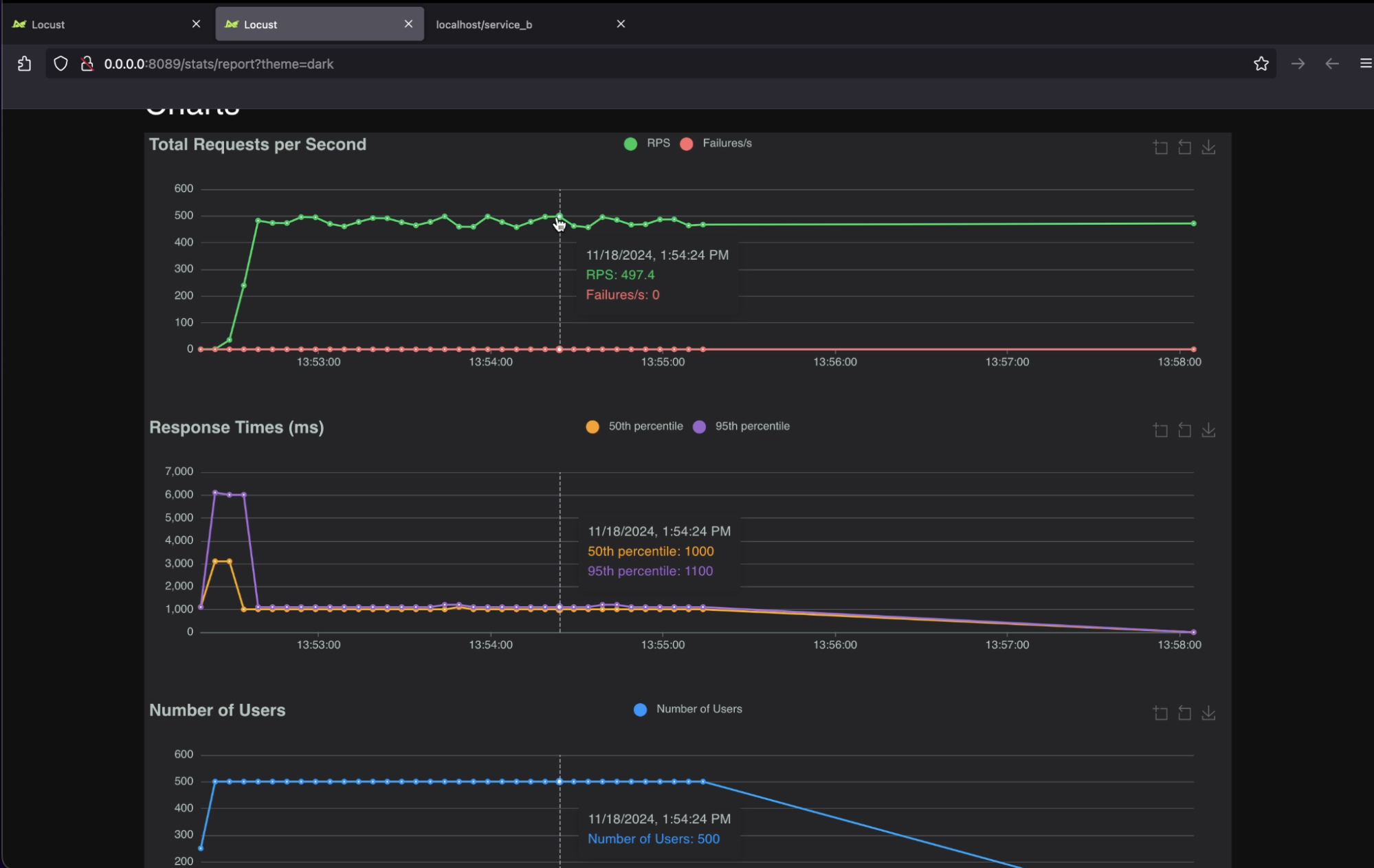

4.4. Final state: after load test

k8 Dashboard: Final State

k8 Terminal: Final State

Locust report result

5. Monolithic vs Microservices:-

I know that an actual application can be far more complicated, especially when a database is involved: simultaneous disk I/O operations can cause data inconsistency and race conditions when multiple services access the same resources, and then there is message queuing, etc. This was simply my attempt to explore k8s.

| Monolithic | Microservices |
|---|---|
| All services tightly coupled | Services loosely coupled |
| Full application is packaged in a pod | Each service gets its own pod |
| Easy to implement | Challenging to implement |
| Even if the load increases at a particular endpoint/service, the full application is scaled up. | Only the particular service at which the load is increasing is scaled up. |
| Higher cost | Lower cost |

I am seeking full-time SWE roles starting May 2025. If you have any leads, please contact me at [email protected].

| Type | Details |
|---|---|
| [email protected] | |
| Github Repository | https://github.com/nuttysunday/cool-kubernetes |
| Website | https://shivam.foo/ |
| Blog | https://shivam.foo/blogs/cool-kubernetes |
| Github Profile | https://github.com/nuttysunday |
| Twitter Profile | https://x.com/sundaycide |
| Resume | View Resume |